Real-time audio and video in the metaverse

In the last couple of years, many exciting metaverse projects have started with visions to connect us through immersive, spatial environments, closer to how we connect with others in the physical world. A unifying principle among them is accessibility: a truly open metaverse should run in a browser, agnostic to hardware or operating system. One upfront challenge is how to build a graphically intensive 3D virtual world that runs at 60fps across Chrome, Firefox and Safari. Babylon.js and Three.js are wonderful tools, but feel incomplete in areas like physics or multiplayer support. Developers have coalesced around Unity, which supports a broad list of platforms including a WebGL compilation target.

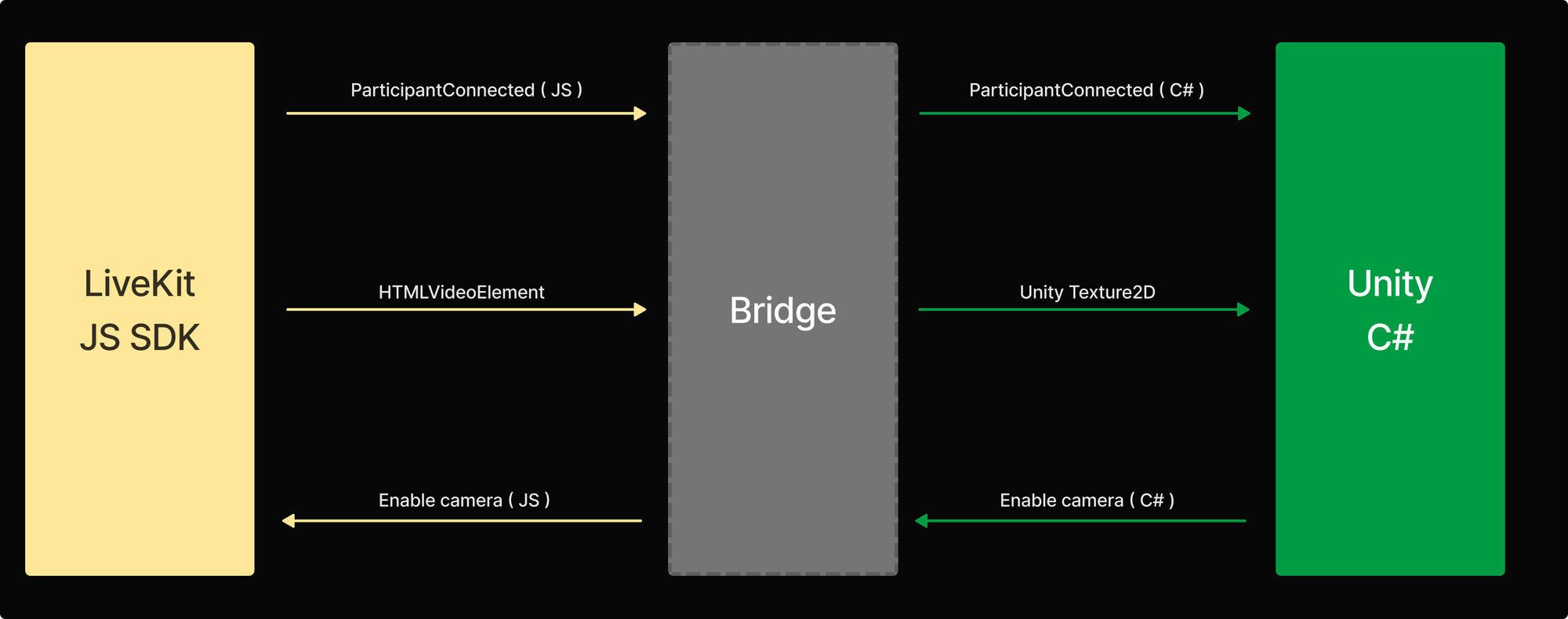

We spoke to a few developers of browser-based Unity applications who built real-time audio and video features to help their users connect and interact. We learned they manage game state within the Unity/C# execution environment and write separate JavaScript (JS) code to leverage the browser's WebRTC (libwebrtc) implementation for audio and video. Messages about player state are passed from Unity to JS via a bridge, and video frames travel from JS to Unity, where they are converted to a 2D texture and applied to a game object, using the same mechanism. Sometimes overlay menus are implemented in C# with events triggered in JS; other times, the reverse! 😵‍💫

We wanted to give Unity developers targeting WebGL a seamless way to integrate real-time audio and video into their applications.

LiveKit's Unity WebGL SDK

There were two major goals when we sat down to design our Unity WebGL SDK:

- Leverage our battle-tested JavaScript SDK.

- Help developers avoid spaghetti code. 🍝

The first one was easy: since a developer's application is running in a browser, our Unity package can use Webpack to bundle in our JavaScript SDK. The question then became: how could we give developers access to it without leaving C#?

Interoperability with JavaScript

To grant Unity access to the browser's built-in WebRTC support, we wrote a bridge between C# and JavaScript.

Each class from our JS SDK has an equivalent wrapper class in our Unity SDK. Here's an example snippet from the RemoteParticipant C# wrapper:

    public class RemoteParticipant : JSRef
    {
        [Preserve]
        internal RemoteParticipant(JSHandle ptr) : base(ptr)
        {
        }

        public void SetVolume(float volume)
        {
            JSNative.PushNumber(volume);
            JSNative.CallMethod(NativePtr, "setVolume");
        }

        public float GetVolume()
        {
            return (float) JSNative.GetNumber(JSNative.CallMethod(NativePtr, "getVolume"));
        }

        // ...
    }

Note that shadowed C# methods don't directly bridge their JS analogue. Instead, we construct a message containing the target method name and associated parameters and pass it through a single bridged function: CallMethod. This technique yields a minimal bridge interface and saves writing lots of JavaScript glue code.
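To illustrate the single-entry-point idea, here is a minimal TypeScript sketch of what the JavaScript side of such a bridge could look like. Every name in it (Bridge, register, pushNumber, callMethod, FakeParticipant) is hypothetical and chosen for illustration; it is not the SDK's actual bridge code. It also simplifies one detail: the C# snippet above reads results through JSNative.GetNumber, whereas this sketch returns the value directly.

```typescript
// Hypothetical sketch of a stack-based call bridge; not LiveKit's real code.
type Handle = number;

class Bridge {
  private stack: unknown[] = []; // arguments pushed ahead of a call
  private objects: any[] = [];   // JS objects, addressed by handle (array index)

  // Make a JS object addressable from the other runtime.
  register(obj: object): Handle {
    this.objects.push(obj);
    return this.objects.length - 1;
  }

  pushNumber(n: number): void { this.stack.push(n); }
  pushString(s: string): void { this.stack.push(s); }

  // The single generic entry point: find the method by name, apply the
  // pending arguments, and leave the stack empty for the next call.
  callMethod(target: Handle, method: string): unknown {
    const obj = this.objects[target];
    if (obj === undefined) throw new Error("unknown handle " + target);
    const fn = obj[method];
    if (typeof fn !== "function") throw new Error("no method " + method);
    const args = this.stack.splice(0, this.stack.length);
    return fn.apply(obj, args);
  }
}

// Stand-in for an object from the JS SDK.
class FakeParticipant {
  private volume = 1;
  setVolume(v: number): void { this.volume = v; }
  getVolume(): number { return this.volume; }
}

const bridge = new Bridge();
const participantHandle = bridge.register(new FakeParticipant());
bridge.pushNumber(0.25);
bridge.callMethod(participantHandle, "setVolume");
const volumeAfter = bridge.callMethod(participantHandle, "getVolume") as number;
```

With this shape, adding a new wrapped method needs no new glue on the JavaScript side; only the wrapper that pushes arguments and names the method changes.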

In general, we maintain state on the JS side, since objects in C# may be garbage-collected at any time if the application developer doesn't hold a reference. The exception is objects that bind to events; in this case, our (Unity) SDK maintains references for event listeners and does its own reference counting.
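Reference counting of this kind can be sketched generically as a handle table. The TypeScript below is an assumption-laden illustration, not the SDK's bookkeeping: HandleTable and its methods are hypothetical names, and the real SDK keeps its listener references on the Unity side.

```typescript
// Hypothetical ref-counted handle table; names are illustrative only.
class HandleTable<T> {
  private entries: { [handle: number]: { value: T; refs: number } } = {};
  private next = 1;

  // Store a value and return an integer handle holding one reference.
  add(value: T): number {
    const handle = this.next++;
    this.entries[handle] = { value: value, refs: 1 };
    return handle;
  }

  get(handle: number): T | undefined {
    const entry = this.entries[handle];
    return entry ? entry.value : undefined;
  }

  addRef(handle: number): void {
    const entry = this.entries[handle];
    if (!entry) throw new Error("unknown handle " + handle);
    entry.refs++;
  }

  // Drop one reference; the entry is removed when the count reaches zero.
  release(handle: number): void {
    const entry = this.entries[handle];
    if (!entry) return;
    entry.refs--;
    if (entry.refs === 0) delete this.entries[handle];
  }
}

const handles = new HandleTable<string>();
const roomHandle = handles.add("room");
handles.addRef(roomHandle);   // e.g. an event listener now depends on the object
handles.release(roomHandle);  // the original holder lets go; the listener still holds it
const stillAlive = handles.get(roomHandle) === "room";
handles.release(roomHandle);  // listener removed: last reference gone
const goneNow = handles.get(roomHandle) === undefined;
```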

Bridging textures from the browser to Unity

A particularly interesting (and fun!) challenge was getting WebRTC video frames into Unity for mapping onto game objects. Unfortunately, web browsers don't provide direct access to a native video handle. To move a video frame from an HTMLVideoElement to Unity's Texture2D, we needed to copy it to the GPU as a WebGL texture.

glTexSubImage2D seemed up to the task, but we went with glTexImage2D. Despite the latter doing extra work, like allocating storage and generating size and format definitions, our benchmarks indicated glTexImage2D ran faster (sometimes considerably so) than glTexSubImage2D. We suspect this is because glTexSubImage2D lacks intra-GPU copy optimizations.
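As a rough sketch of the per-frame upload described here, the TypeScript below re-specifies a texture from a video source with texImage2D. GlLike is a locally declared subset of the WebGL 1 context so the example stays self-contained; uploadFrame and the recording fake are hypothetical, and in a browser you would pass a real context, a WebGLTexture and an HTMLVideoElement instead.

```typescript
// Local subset of the WebGL 1 API used by this sketch (an assumption made
// so the example runs without DOM typings).
interface GlLike<Tex, Src> {
  readonly TEXTURE_2D: number;
  readonly RGBA: number;
  readonly UNSIGNED_BYTE: number;
  bindTexture(target: number, texture: Tex | null): void;
  texImage2D(target: number, level: number, internalformat: number,
             format: number, type: number, source: Src): void;
}

// Re-specify the whole texture from the current video frame.
function uploadFrame<Tex, Src>(gl: GlLike<Tex, Src>, tex: Tex, frame: Src): void {
  gl.bindTexture(gl.TEXTURE_2D, tex);
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, frame);
}

// Recording fake, standing in for a browser context.
const glCalls: string[] = [];
const fakeGl: GlLike<string, string> = {
  TEXTURE_2D: 0x0de1,
  RGBA: 0x1908,
  UNSIGNED_BYTE: 0x1401,
  bindTexture: (_target, texture) => { glCalls.push("bind:" + texture); },
  texImage2D: (_target, _level, _internal, _format, _type, source) => {
    glCalls.push("upload:" + source);
  },
};

uploadFrame(fakeGl, "tex0", "frame0");
```

In a real page this function would typically run once per rendered frame for each attached video element.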

A final hurdle: Unity's Texture2D is immutable, so we couldn't simply populate one by calling glTexImage2D from JavaScript. Instead, we create the texture in JavaScript and bind to it from Unity via Texture2D.CreateExternalTexture:

    var tex = GLctx.createTexture();
    var id = GL.getNewId(GL.textures);
    tex.name = id;

    /* Add to Unity's textures array (to be used with
     * Texture2D.CreateExternalTexture on the C# side) */
    GL.textures[id] = tex;

Bridging data channels

Some developers also use LiveKit's data channels to transmit arbitrary information like pub/sub events or chat messages. Since byte arrays are blittable types in C#, moving data channel packets to Unity is relatively straightforward.
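Under the WebGL target, both runtimes work against one shared block of memory, so moving bytes comes down to two typed-array operations: a copy into that memory, or a view over it. The TypeScript sketch below models this with a stand-in heap. The 64-byte buffer, the addresses, and the helper names copyIntoHeap and viewFromHeap are all assumptions for illustration; Unity's real heap is set up by Emscripten.

```typescript
// Stand-in for the shared heap: one ArrayBuffer viewed as bytes.
const heap = new ArrayBuffer(64);
const HEAPU8 = new Uint8Array(heap);

// JS -> C#: the packet lives outside the heap, so its bytes are copied to
// the destination address (a plain byte offset into the heap).
function copyIntoHeap(packet: Uint8Array, destPtr: number): void {
  HEAPU8.set(packet, destPtr);
}

// C# -> JS: the bytes are already in the heap, so a view is enough.
// subarray() shares memory with HEAPU8 instead of copying.
function viewFromHeap(ptr: number, offset: number, size: number): Uint8Array {
  const start = ptr + offset;
  return HEAPU8.subarray(start, start + size);
}

const packet = new Uint8Array([1, 2, 3, 4]);
copyIntoHeap(packet, 16);

const heapView = viewFromHeap(16, 1, 2); // covers heap bytes 17 and 18
HEAPU8[17] = 42;                         // a later heap write shows through the view
```

Because the view aliases heap memory, it should be consumed, or copied with slice(), before that memory is reused.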

In JavaScript, Emscripten’s heap is represented using an ArrayBuffer with different views (HEAPU8, HEAPU16, etc.). To get data from JavaScript to C#, we must first copy it to heap memory:

    // C# side: read the packet length, allocate a buffer, and ask JS to fill it
    JSNative.PushString("byteLength");
    var length = (int) JSNative.GetNumber(JSNative.GetProperty(NativePtr));
    var buff = new byte[length];
    JSNative.CopyData(NativePtr, buff, 0, length);

    // JavaScript side: copy the requested bytes into the heap at buff
    CopyData: function(ptr, buff, offset, count) {
        var value = LKBridge.Data.get(ptr);
        var arr = new Uint8Array(value, offset, count);
        HEAPU8.set(arr, buff);
    }

However, to transmit in the other direction, from C# to JavaScript, copying is unnecessary:

    [DllImport("__Internal")]
    internal static extern void PushData(byte[] data, int offset, int size);

    PushData: function (data, offset, size) {
        var of = data + offset;
        LKBridge.Stack.push(HEAPU8.subarray(of, of + size));
    }

Using LiveKit to add video to Unity games and apps

Our Unity WebGL SDK supports writing C#, JavaScript, or a mix of both. We designed it to be flexible since some developers have existing UI components in JavaScript.

Here’s a quick example of how to connect to a LiveKit Room and publish your webcam and mic from Unity:

    IEnumerator Start()
    {
        var room = new Room();
        var connOp = room.Connect("<livekit-ws>", "<participant-token>");
        yield return connOp;

        room.TrackSubscribed += (track, publication, participant) =>
        {
            if (track.Kind != TrackKind.Video)
                return;

            var video = track.Attach() as HTMLVideoElement;
            video.VideoReceived += tex =>
            {
                // tex is a Texture2D that you can use anywhere
            };
        };

        yield return room.LocalParticipant.EnableCameraAndMicrophone();
    }

If you want to build your UI in React, you can easily mix C# and JavaScript. Here's a (TypeScript) snippet where a user can mute their microphone from JavaScript, while in a room created by C#:

    import { UnityBridge, UnityEvent } from '@livekit/livekit-unity';
    import { Room } from 'livekit-client';

    var room: Room;
    UnityBridge.instance.on(UnityEvent.RoomCreated, async (r) => {
        room = r;
    });

    // Mute the microphone by clicking the button
    var muteBtn = document.getElementById('mutebtn');
    muteBtn!.addEventListener('click', async () => {
        await room.localParticipant.setMicrophoneEnabled(false);
    });

See the SDK in action!

Unity demo in action. Try it yourself at https://unity.livekit.io

Paying homage to the classic Unity multiplayer demo, we put together a sample application to show the power of our Unity WebGL SDK. Since video frames are ordinary Unity textures, we chose to render each player's camera feed as cyberpunk-style, interlaced video. 😎

Our demo also features rudimentary spatial audio so you can talk to nearby players. All game state is managed through data channels using LiveKit's SFU, obviating the need for a separate game server (though we recommend you use one for production applications).
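One simple way to approximate spatial audio is to scale each remote participant's volume by distance from the listener. The TypeScript sketch below shows a linear falloff. It is an assumption about how such an effect could be built, not the demo's actual implementation, and Vec3, volumeForDistance and the distance thresholds are hypothetical.

```typescript
interface Vec3 { x: number; y: number; z: number; }

// Linear falloff: full volume inside minDist, silent at or beyond maxDist.
function volumeForDistance(listener: Vec3, speaker: Vec3,
                           minDist: number = 1, maxDist: number = 10): number {
  const dx = speaker.x - listener.x;
  const dy = speaker.y - listener.y;
  const dz = speaker.z - listener.z;
  const d = Math.sqrt(dx * dx + dy * dy + dz * dz);
  if (d <= minDist) return 1;
  if (d >= maxDist) return 0;
  return 1 - (d - minDist) / (maxDist - minDist);
}

const listenerPos: Vec3 = { x: 0, y: 0, z: 0 };
const nearVolume = volumeForDistance(listenerPos, { x: 0.5, y: 0, z: 0 });
const midVolume = volumeForDistance(listenerPos, { x: 5.5, y: 0, z: 0 });
const farVolume = volumeForDistance(listenerPos, { x: 0, y: 0, z: 20 });
```

The result, a value between 0 and 1, could be passed to the SetVolume wrapper shown earlier each time player positions change.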

Bridging the Unity and JavaScript runtimes was a lot of work, but worth the effort. We're excited about a future where the Web is filled with expansive virtual worlds and rich 3D spaces to explore. These experiences will be even more fun to explore together with others. Our SDK provides a turnkey solution for adding real-time audio and video presence to any Unity WebGL-targeted application. We can't wait to see what you build with it!

Find the LiveKit Unity WebGL SDK on GitHub.

As always, if you have any questions, thoughts or suggestions, our LiveKit Developer Community is a warm and friendly place to hang out. 🤗