A tale of two protocols: comparing WebRTC against HLS for live streaming

Do you remember the last time you watched a WWDC keynote? Did you watch it live? Did you read tweets about something you hadn't seen yet and wonder why?

The answer is that Apple live streams these events using HLS, just like most other streaming video services, including those streaming football games and presidential debates. Unlike live TV broadcasts, where every viewer sees the same video at the same time, HLS has variable latency. That means every viewer watching the same video has a slightly different time delay. You might have seen Tim Cook unveil the Apple Vision Pro a full minute before or after someone else.

In this post, we'll explore how HLS works, why it has variable latency, and how it differs from a newer live streaming technology which eliminates variable latency altogether: server-mediated WebRTC.

The inner workings of live streaming technology

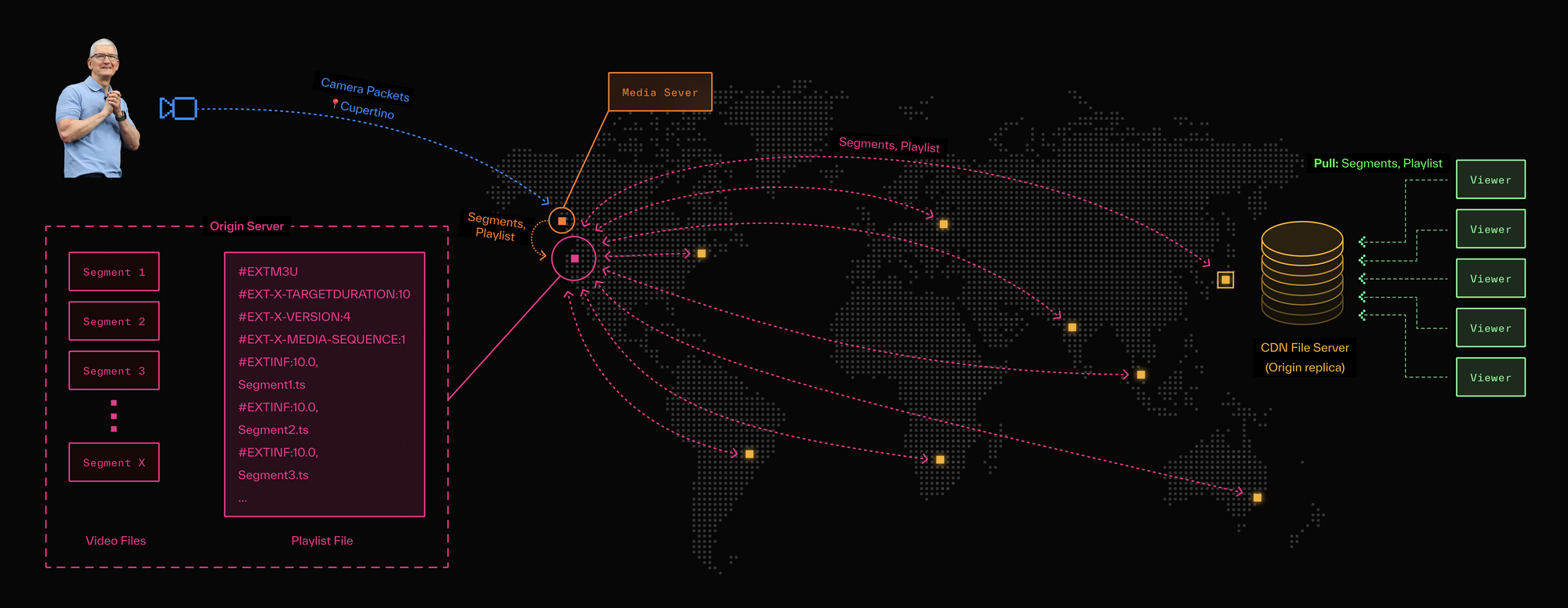

An Apple keynote usually opens with a camera facing Tim Cook in Steve Jobs Theater. Under the hood, that camera is constantly streaming video packets to a media server (or sometimes, studio software running locally).

In the case of HLS, that media server collects these packets and runs them through an encoding pipeline to generate segments, each typically a six-second video file. A copy of each segment and a “playlist” file are then uploaded to an origin server and downloaded by file servers around the world (i.e. a CDN). The playlist is simply a text file listing the order in which the video files should be played, not unlike a Spotify playlist or album.

For the duration of the keynote, video packets are continuously uploaded, video files created, playlist updated, and both video files and playlist downloaded by globally-distributed file servers.

When you (or I) navigate to a webpage to watch the keynote, an HLS player (i.e. client) connects to the geographically closest file server, downloads the playlist file, finds a recent entry (denoting a recently recorded segment) and starts downloading and playing it. As new video files are recorded and the corresponding playlist is updated, those segments will be fetched by your client in the background and added to your local buffer for playback.
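To make the playlist-and-poll flow concrete, here is a minimal sketch of what a player does with a media playlist. The playlist text, the file names, and the `pick_start_segment` policy of starting three segments behind the newest entry are assumptions for illustration; real players apply their own heuristics.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    uri: str
    duration: float  # seconds, taken from the #EXTINF tag


def parse_media_playlist(text: str) -> list[Segment]:
    """Collect (uri, duration) pairs from an HLS media playlist."""
    segments = []
    pending_duration = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXTINF:"):
            # "#EXTINF:6.0," -> 6.0 (an optional title may follow the comma)
            pending_duration = float(line[len("#EXTINF:"):].split(",")[0])
        elif line and not line.startswith("#") and pending_duration is not None:
            # A non-tag line following #EXTINF is the segment's URI
            segments.append(Segment(uri=line, duration=pending_duration))
            pending_duration = None
    return segments


def pick_start_segment(segments: list[Segment], segments_behind_live: int = 3) -> Segment:
    """Hypothetical join policy: start a few segments behind the newest one."""
    index = max(0, len(segments) - segments_behind_live)
    return segments[index]


# Made-up playlist for illustration
EXAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:6.0,
keynote_120.ts
#EXTINF:6.0,
keynote_121.ts
#EXTINF:6.0,
keynote_122.ts
#EXTINF:6.0,
keynote_123.ts
"""

segments = parse_media_playlist(EXAMPLE_PLAYLIST)
start = pick_start_segment(segments)
print(start.uri)
```

A live player would re-fetch the playlist on an interval and append any entries it has not seen yet to its buffer.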

HLS is built on the stateless HTTP-based delivery infrastructure we’ve had since the 1990s. In a nutshell, the streamer uploads video files to a shared folder while the viewers poll the same folder for new files and download them as they appear. When this exchange is done without network issues, it gives the appearance of watching a live stream.

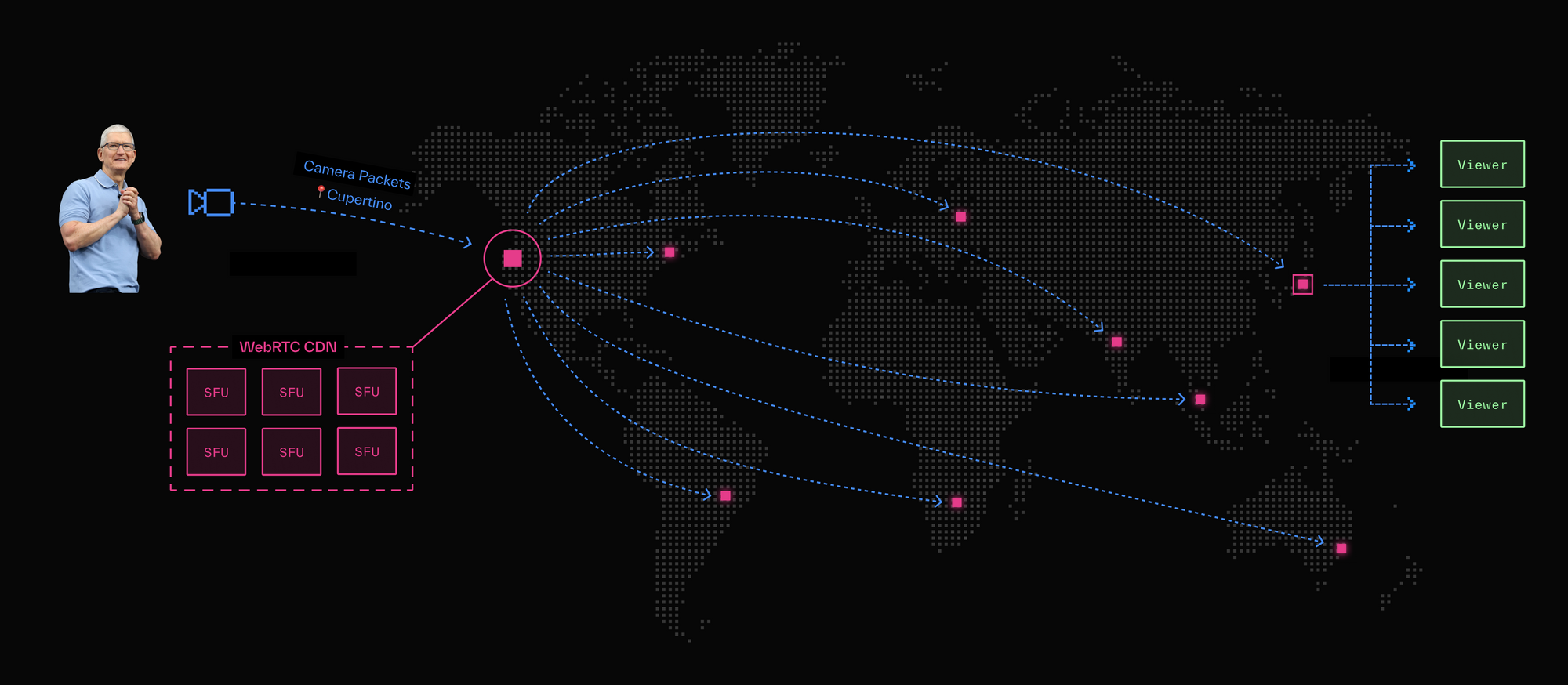

WebRTC relies on the more recent introduction of UDP sockets in browsers. In contrast to HLS, a WebRTC media server acts as a stateful router: a session or dedicated network path is first established between streamers and viewers, then media flows between them. If an Apple keynote used WebRTC, copies of video packets hot off the camera would be pushed directly to viewers by the media server.

A key difference between these two approaches: with HLS, the clients (both streamer and viewers) are in control and operate independently of one another. With WebRTC, the media server (a selective forwarding unit, or “SFU”) and clients have a tight coordination loop, but the server is largely in control.

In the next section, we will explore several ways in which this difference in design becomes apparent.

The tradeoffs between HLS and WebRTC

Latency

One of the first things developers evaluate is protocol overhead, or the latency under ideal network conditions.

Due to the encoding of packets into files and the client-driven fetching of segments over TCP, it’s no surprise that HLS has higher latency than WebRTC. HLS has a baseline latency of 5-30 seconds. There are a few variants of HLS, like LL-HLS and Periscope’s LHLS (coauthored by our very own Benjamin Pracht), designed to reduce latency through techniques such as shorter segment lengths and delivering partial segments, but even with those, latency remains between 2 and 5 seconds.
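A rough back-of-the-envelope model shows where those numbers come from. This sketch assumes a player holds a few whole segments in its buffer before playback; the three-segment default and the function name are our assumptions for illustration, not figures from any particular player.

```python
def estimated_hls_latency(segment_seconds: float,
                          buffered_segments: int = 3,
                          pipeline_overhead_seconds: float = 0.0) -> float:
    """Simplified model: buffered media plus any encode/upload/CDN overhead."""
    return segment_seconds * buffered_segments + pipeline_overhead_seconds


# Six-second segments, as in classic HLS
classic = estimated_hls_latency(6.0)
# One-second segments, standing in for a low-latency variant
low_latency = estimated_hls_latency(1.0)
print(classic, low_latency)
```

Under these assumptions, six-second segments land well inside the 5-30 second range, and shrinking the segment length is the main lever for getting down to a few seconds.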

WebRTC minimally interferes with media packets and pushes them as fast as possible between sender and receiver. The result is an end-to-end latency with an upper bound of 300ms. Over optimized networks it can even approach speed-of-light transmission (about 66ms across the longest arc over Earth).
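The speed-of-light figure is easy to sanity-check. The sketch below assumes the longest arc is half of Earth's equatorial circumference and that light travels at its vacuum speed; both constants are approximations we supply, not values from a specific network.

```python
EARTH_CIRCUMFERENCE_KM = 40_075.0       # equatorial circumference, approximate
SPEED_OF_LIGHT_KM_PER_S = 299_792.458   # in a vacuum


def one_way_delay_ms(distance_km: float) -> float:
    """Time for light to cover the given distance, in milliseconds."""
    return distance_km / SPEED_OF_LIGHT_KM_PER_S * 1000.0


# The farthest two points on Earth are half the circumference apart
half_way_around_ms = one_way_delay_ms(EARTH_CIRCUMFERENCE_KM / 2)
print(f"{half_way_around_ms:.1f} ms")
```

This comes out just under 67ms. Light in optical fiber travels noticeably slower than in a vacuum, so real networks sit above this floor.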

Adaptability

Like the road system, network conditions are rarely ideal. So we have to investigate how these protocols handle issues like congestion, low bandwidth, packet loss, and jitter.

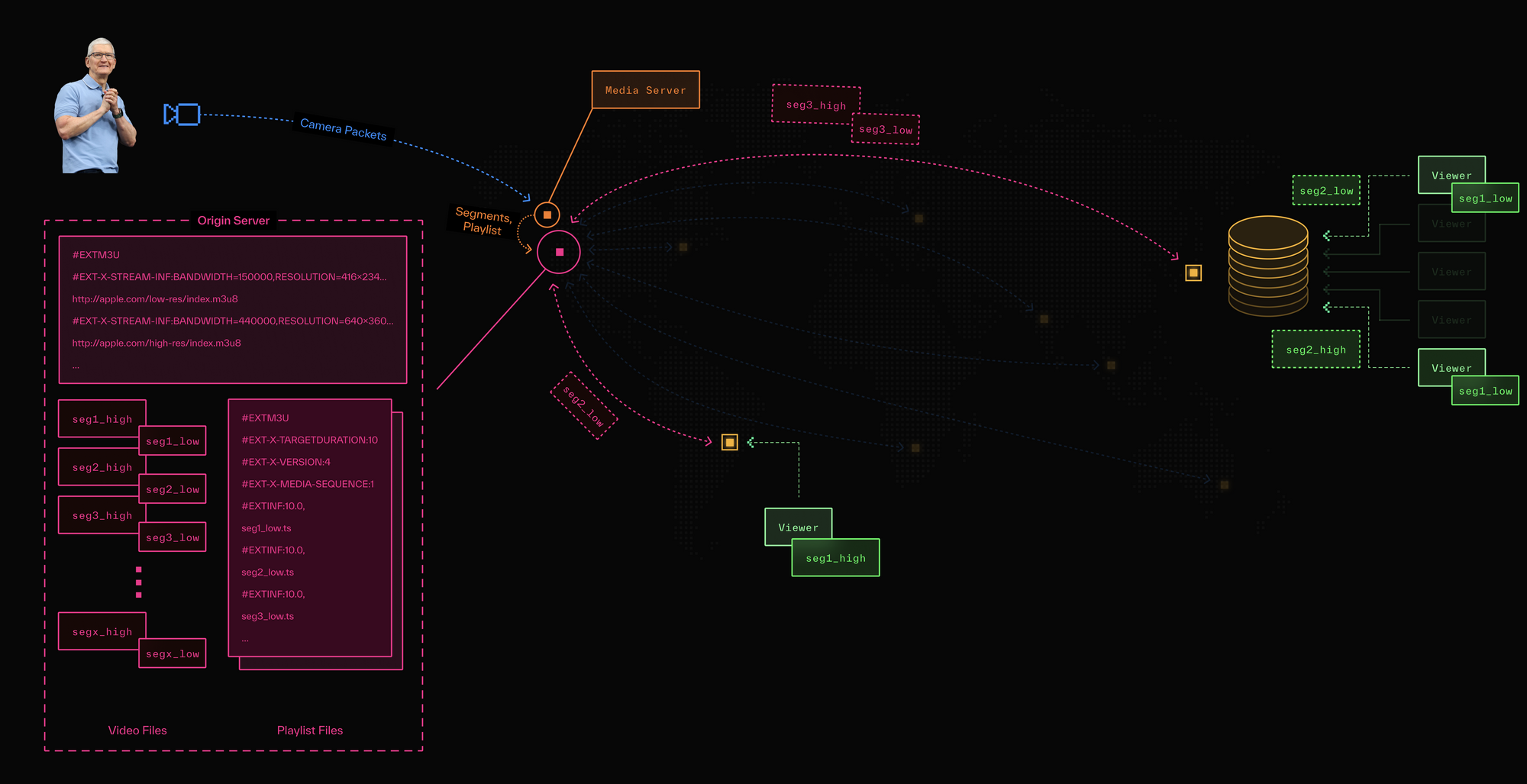

One common technique for dealing with congestion or low bandwidth is adaptive bitrate (ABR) streaming. In the context of live streaming, ABR answers the question: how can we dynamically deliver lower quality video when the viewer’s network is poor and higher quality video when it’s good?

HLS supports ABR on the streamer side by having the media server’s encoding pipeline generate multiple versions (i.e. files) of the same video data encoded at different bitrates. Each version has its own playlist file, and a new multivariant playlist serves as an index, pointing to each version-specific playlist. The client (i.e. HLS player), however, is responsible for selecting the appropriate version of the next segment to retrieve during the polling interval. With segment lengths typically being six or more seconds, HLS might react slowly to changes in network conditions. If a player continues to fetch a version with a higher bitrate than the viewer's connection can accommodate, the stream may pause to buffer.
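Here is a minimal sketch of the client-side decision described above, assuming the player has already parsed the multivariant playlist into a list of variants and has some throughput measurement from recent downloads. The variant list, the `choose_variant` name, and the 0.8 safety factor are hypothetical; each player has its own heuristics.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    playlist_uri: str
    bandwidth_bps: int  # BANDWIDTH attribute from #EXT-X-STREAM-INF


def choose_variant(variants, measured_throughput_bps, safety_factor=0.8):
    """Pick the highest-bitrate variant that fits within a safety margin."""
    budget = measured_throughput_bps * safety_factor
    ordered = sorted(variants, key=lambda v: v.bandwidth_bps)
    affordable = [v for v in ordered if v.bandwidth_bps <= budget]
    # Fall back to the lowest variant when nothing fits
    return affordable[-1] if affordable else ordered[0]


# Made-up bitrate ladder for illustration
VARIANTS = [
    Variant("low/playlist.m3u8", 800_000),
    Variant("mid/playlist.m3u8", 2_500_000),
    Variant("high/playlist.m3u8", 6_000_000),
]

print(choose_variant(VARIANTS, 4_000_000).playlist_uri)
print(choose_variant(VARIANTS, 500_000).playlist_uri)
```

Because this decision only takes effect when the next segment is requested, a six-second segment means the player can be stuck with a bad choice for several seconds.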

If we shorten the segment length as with LL-HLS, there are still challenges posed by the underlying HTTP transport. TCP doesn’t expose network congestion to the application layer, which makes it difficult for a client to accurately measure available bandwidth. So even if we shorten the polling interval and theoretically give LL-HLS more opportunities to switch bitrates, the limited visibility provided by the transport layer means a client may still remain at a suboptimal quality level for longer than necessary.

TCP doesn’t expose packet loss either. Once a segment is being downloaded, an HLS player has three options: wait for the entire segment to be transferred (potentially buffering during retransmissions), cancel the fetch and switch to a lower quality version, or skip to the next segment. Heuristics vary by player implementation, but in all cases the data used to decide on a strategy is abstracted away from what’s happening at the network level.

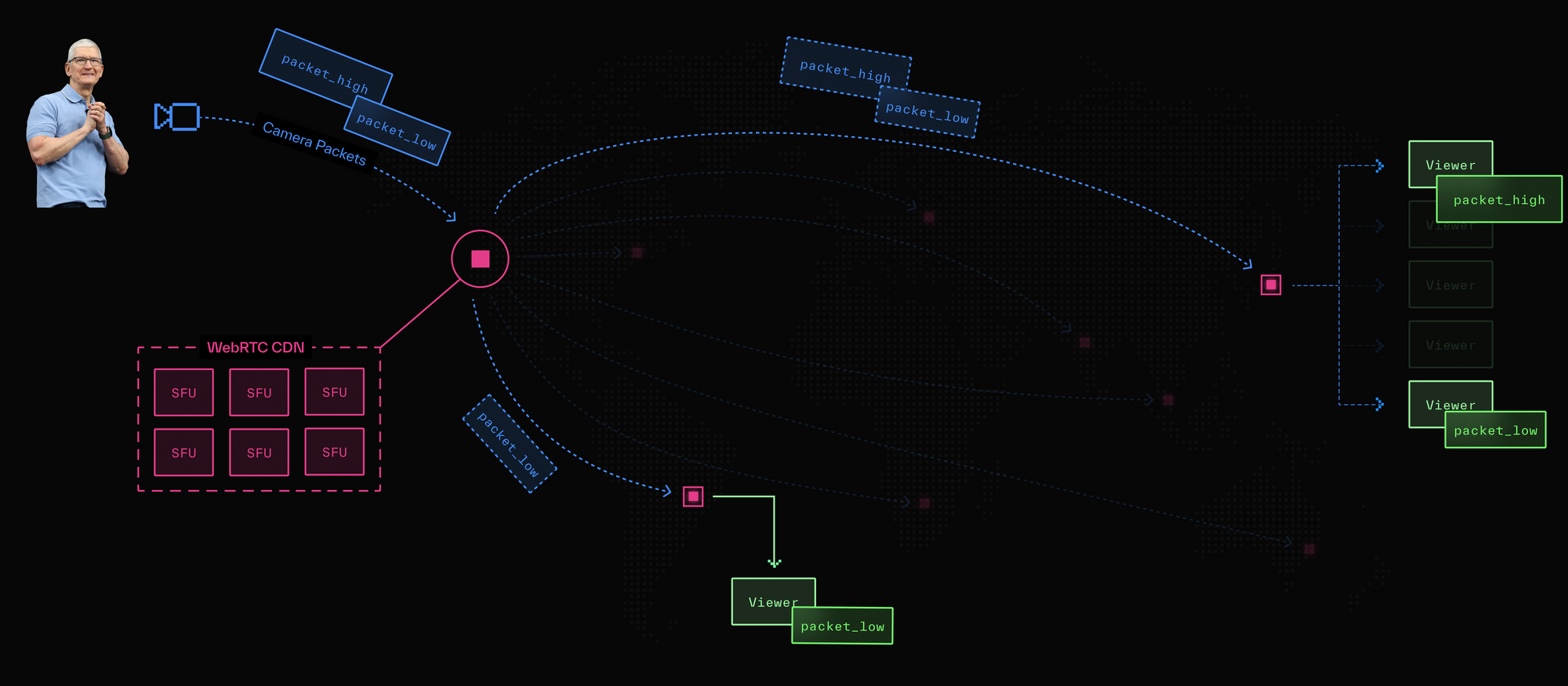

WebRTC uses a method called simulcast to support ABR. Unlike HLS, the streamer themselves publishes multiple versions of their video data at different bitrates. The (media) server is then responsible for choosing the appropriate version of the stream to deliver to each client. Since video data is represented by packets and not segments, an SFU can dynamically switch stream versions (simulcast “layers”) on the fly.
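The sketch below illustrates server-side layer selection for a single subscriber. It is a simplified model, not how any particular SFU is implemented: the layer names, bitrates, and the `SubscriberForwarder` class are hypothetical, and we assume a switch only happens when a keyframe arrives on the target layer so the viewer's decoder can start cleanly.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    layer: str               # simulcast layer this packet belongs to
    sequence: int
    is_keyframe_start: bool  # True if this packet begins a keyframe


# Hypothetical bitrates for three simulcast layers
LAYER_BITRATES_BPS = {"low": 300_000, "medium": 1_000_000, "high": 2_500_000}


class SubscriberForwarder:
    """Tracks which layer one viewer currently receives."""

    def __init__(self, current_layer="low"):
        self.current_layer = current_layer
        self.target_layer = current_layer

    def update_bandwidth_estimate(self, estimate_bps):
        # Aim for the best layer that fits the viewer's estimated bandwidth
        affordable = [name for name, bps in LAYER_BITRATES_BPS.items()
                      if bps <= estimate_bps]
        self.target_layer = max(affordable, key=LAYER_BITRATES_BPS.get,
                                default="low")

    def should_forward(self, packet):
        # Switch layers only at a keyframe on the target layer
        if (packet.layer == self.target_layer
                and packet.layer != self.current_layer
                and packet.is_keyframe_start):
            self.current_layer = self.target_layer
        return packet.layer == self.current_layer


forwarder = SubscriberForwarder(current_layer="high")
forwarder.update_bandwidth_estimate(1_200_000)  # viewer's link got worse
packets = [
    Packet("high", 1, False),
    Packet("medium", 1, False),
    Packet("medium", 2, True),
    Packet("high", 2, False),
]
forwarded = [(p.layer, p.sequence) for p in packets if forwarder.should_forward(p)]
print(forwarded)
```

The viewer keeps receiving the high layer until the first medium-layer keyframe arrives, at which point the forwarder switches over within a single packet rather than waiting for a segment boundary.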

WebRTC's packet-based delivery and use of UDP give it greater visibility and control over the transport layer. If video data arrives late or not at all, the server can either retransmit it or simply move on to newer content. WebRTC also includes a suite of congestion control mechanisms, such as transport-wide congestion control (TWCC), that enable real-time estimation of a link's bandwidth capabilities.
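One way to think about the retransmit-or-move-on choice is as a deadline check. This is a hypothetical rule for illustration only; the function and parameter names are ours, and real implementations weigh more signals than this.

```python
def should_retransmit(now_ms: float,
                      playout_deadline_ms: float,
                      one_way_delay_ms: float) -> bool:
    """A resend only helps if it can arrive before the frame is due on screen."""
    return now_ms + one_way_delay_ms < playout_deadline_ms


# Plenty of time left before the frame is shown: resend the packet
worth_resending = should_retransmit(1000.0, 1100.0, 40.0)
# The frame is due sooner than the packet could arrive: move on
too_late = should_retransmit(1000.0, 1020.0, 40.0)
print(worth_resending, too_late)
```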

Modern codecs such as AV1 and VP9 support scalable video coding (SVC). When a client's network is congested, this allows the SFU to immediately reduce resolution, frame rate, or both by skipping packets, thereby dropping that client's bandwidth requirements. While HLS does support HEVC (i.e. H.265), it does not currently support SVC codecs.
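As a minimal sketch of skipping packets to reduce frame rate, assume each packet is tagged with a temporal layer id, where layer 0 is the base layer and higher layers only add frames on top of it. The two-layer pattern and the names below are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class SvcPacket:
    frame_number: int
    temporal_id: int  # 0 = base layer; higher ids add frame rate


def forward_up_to(packets, max_temporal_id):
    """Keep only packets at or below the chosen temporal layer."""
    return [p for p in packets if p.temporal_id <= max_temporal_id]


# Hypothetical two-layer pattern: even frames are base layer, odd frames enhance it
stream = [SvcPacket(n, 0 if n % 2 == 0 else 1) for n in range(8)]

full_rate = forward_up_to(stream, 1)
half_rate = forward_up_to(stream, 0)
print(len(full_rate), len(half_rate))
```

Dropping the enhancement layer halves the frame rate for that one viewer without asking the streamer to re-encode anything.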

Synchronization

Synchronization, as we mentioned at the start of this post, is probably the most important yet most overlooked difference between HLS and WebRTC.

The problem with HLS is that each client is in control of the viewing experience. The segment selection, buffer size, polling interval, layer switching, and acceptable data rate are all governed by the HLS player. This means that skew between viewers is inevitable; we’re never watching the same thing at the same time. Sometimes we may be only a couple of seconds apart, other times thirty seconds or a minute.

When building a truly shared user experience, this just doesn’t work. For instance, it is common for friends to watch and discuss the same live event together, only to discover that someone has inadvertently shared spoilers before others see them happen.
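A toy model shows how this skew arises even under ideal conditions. It assumes six-second segments that are published only once complete, zero encoding and CDN delay, and a player that starts from the beginning of the newest complete segment; all of these are simplifications we chose for illustration.

```python
import math

SEGMENT_SECONDS = 6.0


def latency_behind_live(join_time_s: float) -> float:
    """Seconds behind the live edge for a viewer joining at join_time_s."""
    # Segment k covers [k*6, (k+1)*6) and is only available once it is complete
    newest_complete = math.floor(join_time_s / SEGMENT_SECONDS) - 1
    playback_starts_at = newest_complete * SEGMENT_SECONDS
    return join_time_s - playback_starts_at


# Two viewers join five seconds apart, one minute into the stream
alice = latency_behind_live(60.0)
bob = latency_behind_live(65.0)
print(alice, bob, bob - alice)
```

Both viewers start from the same segment, so the one who joined five seconds later is five seconds further behind live. Different buffer sizes and stalls only widen the gap.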

Through a combination of an SFU controlling what each viewer sees and when, and a typical client-side buffer size of 100ms or less (intended for smoothing out jitter), viewers of a WebRTC-based live stream effectively see the same thing at the same time.

The need for synchronization ultimately depends on the application you’re building. It’s critical for use cases that depend on audience interaction, such as live shopping or watch parties. It’s less important for "one-way" broadcasts, but even then, your viewers may be discussing your content together in your application via, for example, live chat, or in other third-party applications. Therefore, having everyone on the same page (packet? 😉) leads to, at a minimum, a better UX, and at best, increased engagement or virality.

Scale

CDN infrastructure has been optimized over multiple decades to serve millions of concurrent requests for files — everything from images to JavaScript. Given that HLS piggybacks on a CDN for delivery, an HLS-based live stream can handle millions of concurrent viewers. However, it's important to note that there's no inherent scaling advantage built into the HLS protocol itself.

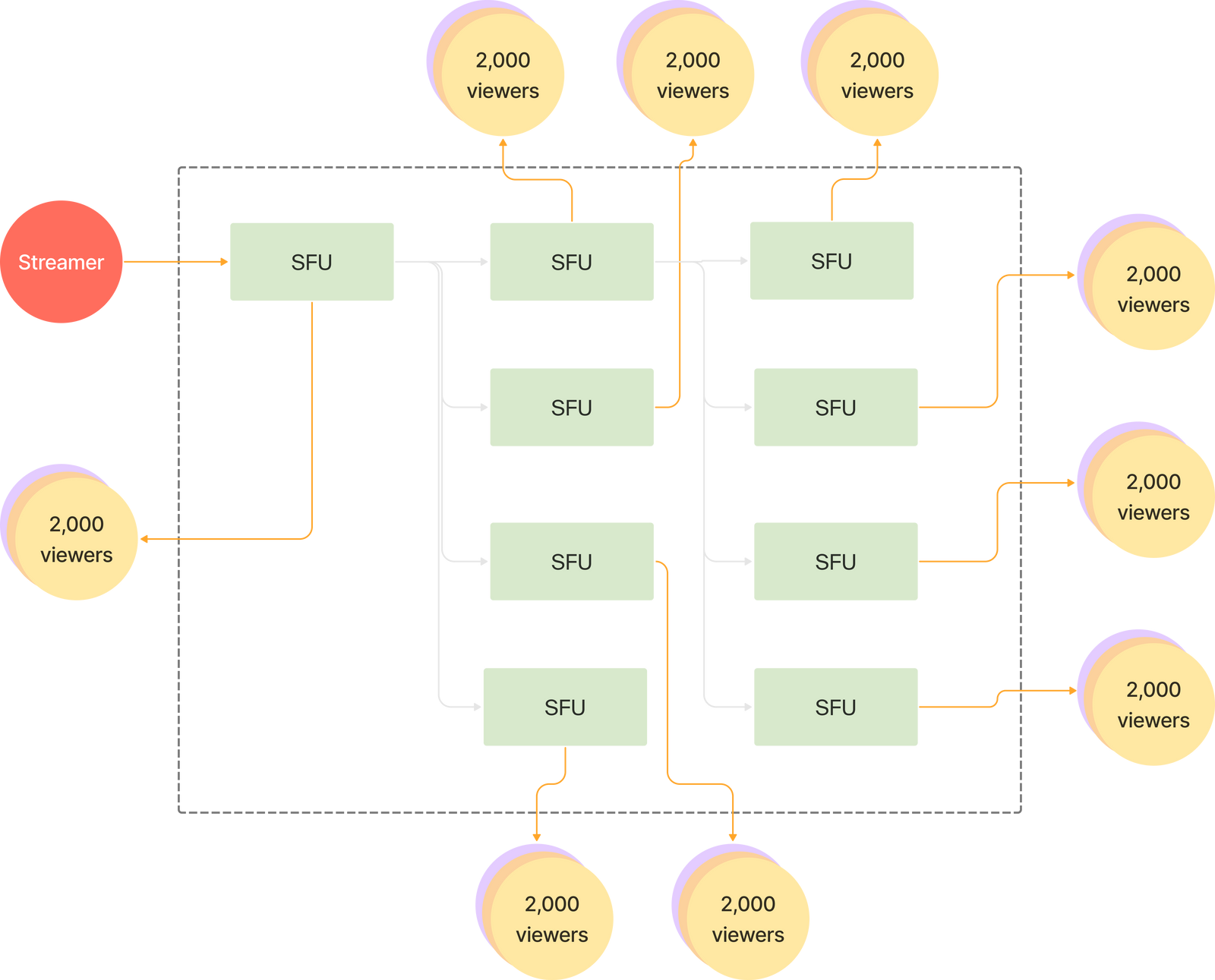

While WebRTC works with packets instead of files, we can still employ CDN-like scaling techniques. For example, packet data can be duplicated and relayed across SFUs and data centers. Viewers of a WebRTC-based live stream connect to and stream packets from their geographically-closest media server. This architecture, modeled on the principles of a CDN, can also handle millions of concurrent viewers.

Functionality

There are clear tradeoffs between HLS and WebRTC that dictate what you can do with each technology.

Audience interaction

HLS is a unidirectional protocol; a streamer’s video is captured and written to files hosted on a CDN. To a viewer, these files are read-only, which means they can’t interact or communicate with the streamer. Applications like Twitch end up building a separate chat feature using WebRTC or WebSockets.

WebRTC is designed for full-duplex communication. In a live stream, any viewer can instantly become another streamer by publishing their camera, microphone, or sharing their screen. The protocol also supports data channels for sending any type of data between users in a session and is frequently used for building live chat functionality.

Streamer setup

To broadcast using HLS, you usually need to run studio software to convert camera pixels into an RTMP stream, as well as a media server to transcode and segment the video into files. This pipeline has many moving parts, and while some desktop and mobile apps have made it easier, it’s not as simple as going live with WebRTC. WebRTC is available in virtually every modern browser and going live is only a few lines of JavaScript.

VOD

Since HLS writes video segments to files hosted on a CDN, it provides VOD (replay and rewind capabilities) for free. An HLS player can start playback from the first (replay) or any other segment (rewind) in the playlist file.
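Rewinding boils down to mapping a time offset onto an entry in the playlist. The sketch below assumes the player already has the list of segment durations; the function name is hypothetical.

```python
def segment_for_offset(durations, offset_seconds):
    """Return (segment index, offset within that segment) for a seek target."""
    elapsed = 0.0
    for index, duration in enumerate(durations):
        if offset_seconds < elapsed + duration:
            return index, offset_seconds - elapsed
        elapsed += duration
    raise ValueError("offset is past the end of the recording")


# One minute of six-second segments
durations = [6.0] * 10

replay = segment_for_offset(durations, 0.0)    # start from the beginning
rewind = segment_for_offset(durations, 45.0)   # jump to 0:45
print(replay, rewind)
```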

To achieve something similar with WebRTC, you need to run a process similar to HLS concurrently. Live viewers are directed to your WebRTC application, while VOD viewers are directed elsewhere. Last year, we launched Egress to make it easy to run a WebRTC-based live stream and simultaneously record and/or stream it out to third-party services like Twitch, YouTube/Facebook Live, and more. You can read about the launch here.

Cost

Transmitting a certain amount of data over the internet costs a certain amount of money.

Video frames contain a lot of data, and while different codecs may be used to compress video more efficiently before transfer, HLS and WebRTC only define how to transfer that video. Relative to the actual video frames, neither protocol adds an appreciable amount of data to the overall payload we need to transport. So fundamentally, whether you’re using HLS or WebRTC, it should cost roughly the same. If you were to self-host HLS video files or manage your own WebRTC server, your costs would primarily consist of bandwidth charges from your underlying cloud provider.

Being a more established (i.e. commoditized) technology, with most of the big cloud providers offering integrated CDN services themselves, HLS is generally the cheapest option for live streaming. As a newer technology initially designed for video conferencing, WebRTC is expensive, and a lack of competition has led most WebRTC providers to charge the same high prices.

We (LiveKit) believe WebRTC is the future of live streaming. In that spirit, we've moved away from the industry standard per-minute pricing model and chosen to charge based on bandwidth usage, similar to a cloud provider. This aligns our interests with yours: when we find ways to operate our network more efficiently, those savings are passed on to you.

For example, next-generation codecs such as VP9 and AV1 can decrease bandwidth usage by an average of 15% and 40%, respectively. When we use AV1 to transmit your application’s data, we pay the cloud provider 40% less, and you pay us 40% less. Here’s a quick comparison of how we stack up against popular HLS-based live streaming solutions:

| Service | LiveKit | Cloudflare | Amazon IVS |
|---|---|---|---|
| Type | WebRTC CDN | HLS | HLS |
| Unit Cost | $0.12/GB* | $1/1000 watched minutes | $0.075/hour |
| Total Cost | $912 | $600 | $750 |

| Codec | LiveKit H.264 | LiveKit VP9 | LiveKit AV1 |
|---|---|---|---|
| Unit Cost | $0.12/GB | $0.12/GB | $0.12/GB |
| Bandwidth Usage | 7.65 TB | 5.35 TB | 4.59 TB |
| Total Cost | $912 | $642 | $551 |
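The bandwidth-based totals can be reproduced with simple arithmetic. This sketch assumes decimal units (1 TB = 1000 GB) and the $0.12/GB rate from the table; the function name is ours.

```python
PRICE_PER_GB_USD = 0.12
GB_PER_TB = 1000  # assuming decimal terabytes


def bandwidth_cost_usd(terabytes: float) -> float:
    """Cost of transferring the given volume at a flat per-GB rate."""
    return terabytes * GB_PER_TB * PRICE_PER_GB_USD


vp9_cost = bandwidth_cost_usd(5.35)
av1_cost = bandwidth_cost_usd(4.59)
print(round(vp9_cost), round(av1_cost))
```

This matches the VP9 and AV1 totals above. Applying the same formula to the 7.65 TB H.264 figure gives about $918 rather than the $912 listed, so that total may have been computed from an unrounded bandwidth number.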

Reviewing your options

Without a doubt, HLS is the battle-tested technology for live streaming to large audiences. It can scale to millions of viewers, and in a cost-effective manner. But when it comes to building truly shared, live experiences, HLS is not the right tool for the job.

“Shared” might mean that members of the audience can dynamically interact with streamers, such as in live shopping, podcasts, or gaming applications. It could also simply mean watching a movie or sporting event together with your friends around the world.

WebRTC's ultra-low latency, full-duplex communication, and cross-viewer synchronization make it ideal for building experiences like these. The two issues historically preventing it from being used for this purpose have been lack of scale and high costs.

The recent emergence of WebRTC CDNs has solved the scalability challenge by applying scaling techniques from traditional CDNs to edge-based packet servers. With LiveKit Cloud’s shift to a bandwidth-based pricing model, we’ve brought the cost of using WebRTC for large-scale live streaming in line with HLS. The use of next-generation SVC codecs will bring that cost significantly lower.

| | HLS | LL-HLS | WebRTC Server | WebRTC CDN |
|---|---|---|---|---|
| Latency | 10+ seconds | 2-3 seconds | 300ms | 300ms |
| Scale | Millions of viewers | Millions of viewers | < 1k viewers | Millions of viewers |
| Cost | $0.06 / hour | $0.08 / hour | $0.24 / hour | $0.05-$0.13 / hour (depending on volume and bitrate) |
| Adaptive bitrate (ABR) | ✅ | ✅ | ✅ | ✅ |
| Scalable video coding (SVC) | ❌ | ❌ | ✅ | ✅ |
| Viewer synchronization | ❌ | ❌ | ✅ | ✅ |
| Interactivity | ❌ | ❌ | ✅ | ✅ |
| Multiple publishers | ❌ | ❌ | ✅ | ✅ |

With all the advantages of HLS now part of WebRTC, you can build experiences that a few years ago weren’t possible. If you’re building something new, looking to add multiplayer features to your existing live streaming application, or reevaluating your current HLS-based setup, don’t sleep on WebRTC.

We love to chat with folks about ideas and code. If you’re into that, come say 👋 in our Slack community.