Universal Egress

WebRTC, a low-latency protocol with ubiquitous support across devices, is fantastic for last-mile delivery, but it can't address every need a developer has when working with audio and video. An application may want to store a session for future playback, relay a stream to a CDN, or process a track through a transcription service: workflows where media travels through a different system or protocol.

Unfortunately, WebRTC doesn't offer this flexibility; it was designed for fast, direct user-to-user transmission of media. So today, we're unveiling a new set of tools and APIs which simplify exporting audio and video from LiveKit.

We call it: Universal Egress.

What can you do with Universal Egress?

Using our server SDK or CLI, LiveKit's egress service allows you to export media from any open room. There are three options available:

- Room composite for exporting an entire room.

- Track composite for exporting synchronized tracks of a single participant.

- Track egress for exporting individual tracks.

Regardless of the method used, LiveKit's egress service automatically transcodes streams for you when moving between protocols, containers or encodings.

Room composite

Using a prebuilt or custom template for track layout, you can export an entire room's media with Room Composite egress. Exported media arrives either as a file, automatically uploaded to a specified cloud storage bucket, or as an RTMP stream.

streamRequest := &livekit.RoomCompositeEgressRequest{

RoomName: "my-room",

Layout: "speaker-dark",

Output: &livekit.RoomCompositeEgressRequest_Stream{

Stream: &livekit.StreamOutput{

Protocol: livekit.StreamProtocol_RTMP,

Urls: []string{"rtmp://live.twitch.tv/app/<stream-key>"},

},

},

}

info, err := egressClient.StartRoomCompositeEgress(ctx, streamRequest)

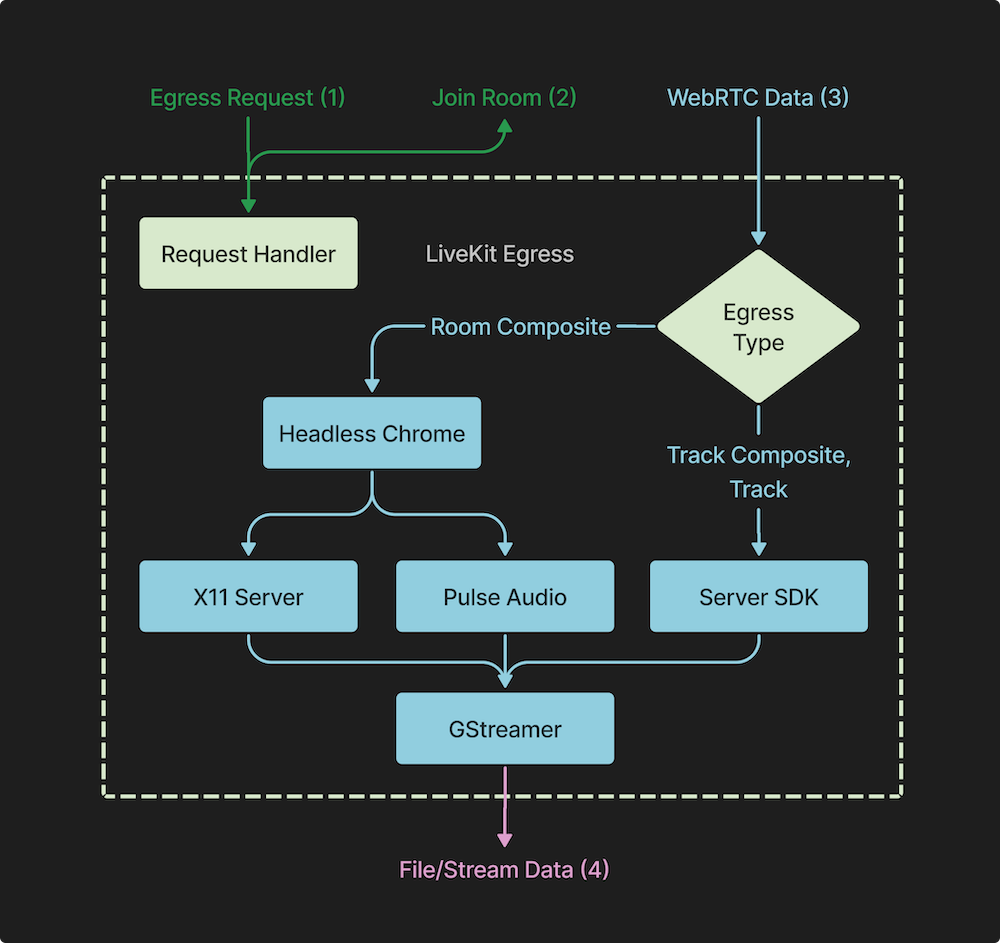

streamEgressID := info.EgressIdWhen a RoomCompositeEgressRequest is received by the egress service, it will open an instance of Chrome, load the selected template as a web page and connect to the specified LiveKit room (as a hidden participant) via JavaScript. While the room remains open and egress request active, the content area of the browser's window will be captured, encoded and wrapped in the appropriate output format.

Capturing with Room Composite is particularly useful when building a session archive or publishing to livestreaming services like YouTube and Twitch. Our Egress Template SDK even allows your recorder and user-facing product to share the same application code.

Using the browser as a compositor

Before we settled on Chrome to facilitate room composition, we explored FFmpeg and GStreamer. Both are wonderful tools that we use in other parts of this stack, and both support stitching together multiple videos, but for this task their APIs proved rigid, verbose and difficult to debug. Furthermore, a developer often wants to record their actual application interface rather than a fixed layout, which can't capture the dynamic nature of their application.

Considering a web browser's primary job is composition, and that most developers probably have a user-facing application to record, it was clear we'd get much for "free" using Chrome as a renderer.

We run a headless instance in full-screen mode, on an X server as a virtual display. GStreamer, in turn, captures the entire window which is exported as a single video. Audio capture is similar: the browser shuttles audio to a virtual device created via PulseAudio and GStreamer captures the output.

Track composite

Track Composite automatically synchronizes and combines a single participant's audio and video tracks, and exports them to a standalone file or RTMP stream. This option is handy for recording each participant individually, such as during a live performance.

Track Composite requires you to pass track identifiers for the target audio and video pair to capture. We recommend using our server APIs or Webhooks to easily obtain this information.

    roomName := "roomname"

    // Look up the participant's tracks (error handling omitted for brevity).
    listRes, _ := roomClient.ListParticipants(ctx, &livekit.ListParticipantsRequest{
        Room: roomName,
    })
    tracks := listRes.Participants[0].Tracks

    // Pick the audio and video tracks to pair.
    var audioTrack *livekit.TrackInfo
    var videoTrack *livekit.TrackInfo
    for _, t := range tracks {
        if t.Type == livekit.TrackType_VIDEO {
            videoTrack = t
        } else if t.Type == livekit.TrackType_AUDIO {
            audioTrack = t
        }
    }

    // Record the pair to an MP4 file and upload it to S3.
    info, err := egressClient.StartTrackCompositeEgress(ctx, &livekit.TrackCompositeEgressRequest{
        RoomName:     roomName,
        AudioTrackId: audioTrack.Sid,
        VideoTrackId: videoTrack.Sid,
        Output: &livekit.TrackCompositeEgressRequest_File{
            File: &livekit.EncodedFileOutput{
                FileType: livekit.EncodedFileType_MP4,
                Filepath: "output/file.mp4",
                Output: &livekit.EncodedFileOutput_S3{
                    S3: &livekit.S3Upload{
                        AccessKey: "key",
                        Secret:    "secret",
                        Bucket:    "bucket",
                        Region:    "bucket region",
                    },
                },
            },
        },
    })
    streamEgressID := info.EgressId

Single track

Track Egress is for when you need a copy of raw track data. Since there's no transcoding or muxing, this is the most computationally efficient option for exporting tracks. It's useful for raw track archival or for processing streams in real time.

For video, we select the appropriate container format given a track's encoding. For example, a track encoded as VP8 will be exported as an ivf file, while one using H.264 results in an mp4. Opus is the only audio format supported by WebRTC, thus a captured audio track is stored as ogg.
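The codec-to-container rule above can be pictured as a small lookup. This is only an illustrative sketch, not the egress service's actual code: the function name containerFor and the MIME-type strings used as keys are assumptions made for the example.

```go
package main

import (
	"fmt"
	"strings"
)

// containerFor returns the file extension described in the text for a
// given track codec. The MIME-type spellings are assumed for illustration.
func containerFor(mimeType string) (string, error) {
	switch strings.ToLower(mimeType) {
	case "video/vp8":
		return "ivf", nil
	case "video/h264":
		return "mp4", nil
	case "audio/opus":
		return "ogg", nil
	default:
		return "", fmt.Errorf("no container mapping for codec %q", mimeType)
	}
}

func main() {
	for _, codec := range []string{"video/VP8", "video/H264", "audio/opus", "video/AV1"} {
		ext, err := containerFor(codec)
		if err != nil {
			fmt.Println("skip:", err)
			continue
		}
		fmt.Printf("%s -> .%s\n", codec, ext)
	}
}
```

Codecs outside the three named in the text return an error here rather than a guessed extension.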

Real-time track processing

Track Egress can also forward a track to another server via WebSocket, enabling features like real-time transcription. At this time, the WebSocket option only supports audio tracks.
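On the receiving side, a WebSocket server sees two kinds of messages from the egress service: binary frames carrying raw pcm_s16le audio, and text frames carrying a small JSON object with a muted flag. The sketch below covers only the decoding of those two payloads, using the Go standard library. It assumes nothing about which WebSocket library you use, and the names muteEvent, decodePCM16LE and parseMuteEvent are hypothetical. Sample rate and channel count are not specified here, so the example treats the audio as a flat list of samples.

```go
package main

import (
	"encoding/binary"
	"encoding/json"
	"fmt"
)

// muteEvent mirrors the JSON text frame sent when a track's mute state changes.
type muteEvent struct {
	Muted bool `json:"muted"`
}

// decodePCM16LE converts a binary frame of signed 16-bit little-endian
// PCM into samples. A trailing odd byte, if present, is ignored.
func decodePCM16LE(frame []byte) []int16 {
	samples := make([]int16, len(frame)/2)
	for i := range samples {
		samples[i] = int16(binary.LittleEndian.Uint16(frame[2*i:]))
	}
	return samples
}

// parseMuteEvent decodes a text frame into a muteEvent.
func parseMuteEvent(frame []byte) (muteEvent, error) {
	var ev muteEvent
	err := json.Unmarshal(frame, &ev)
	return ev, err
}

func main() {
	// A binary frame holding two samples: 1 and -2.
	audio := []byte{0x01, 0x00, 0xFE, 0xFF}
	fmt.Println(decodePCM16LE(audio))

	// A text frame announcing that the track was muted.
	ev, err := parseMuteEvent([]byte(`{"muted": true}`))
	if err != nil {
		fmt.Println("bad text frame:", err)
		return
	}
	fmt.Println("muted:", ev.Muted)
}
```

In a real handler, the message type reported by your WebSocket library (binary versus text) would decide which of the two functions to call.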

    egressInfo, err := c.StartTrackEgress(ctx, &livekit.TrackEgressRequest{
        RoomName: "room",
        TrackId:  "trackID",
        Output: &livekit.TrackEgressRequest_WebsocketUrl{
            WebsocketUrl: "wss://your-server/",
        },
    })

Once a connection is established with your WebSocket server, the egress service will transmit binary frames containing the track's raw PCM (pcm_s16le) audio. If the track's state toggles between muted/unmuted, we'll send text frames with the following JSON:

    // when muted
    {
        "muted": true
    }

    // when unmuted
    {
        "muted": false
    }

Designing the egress service

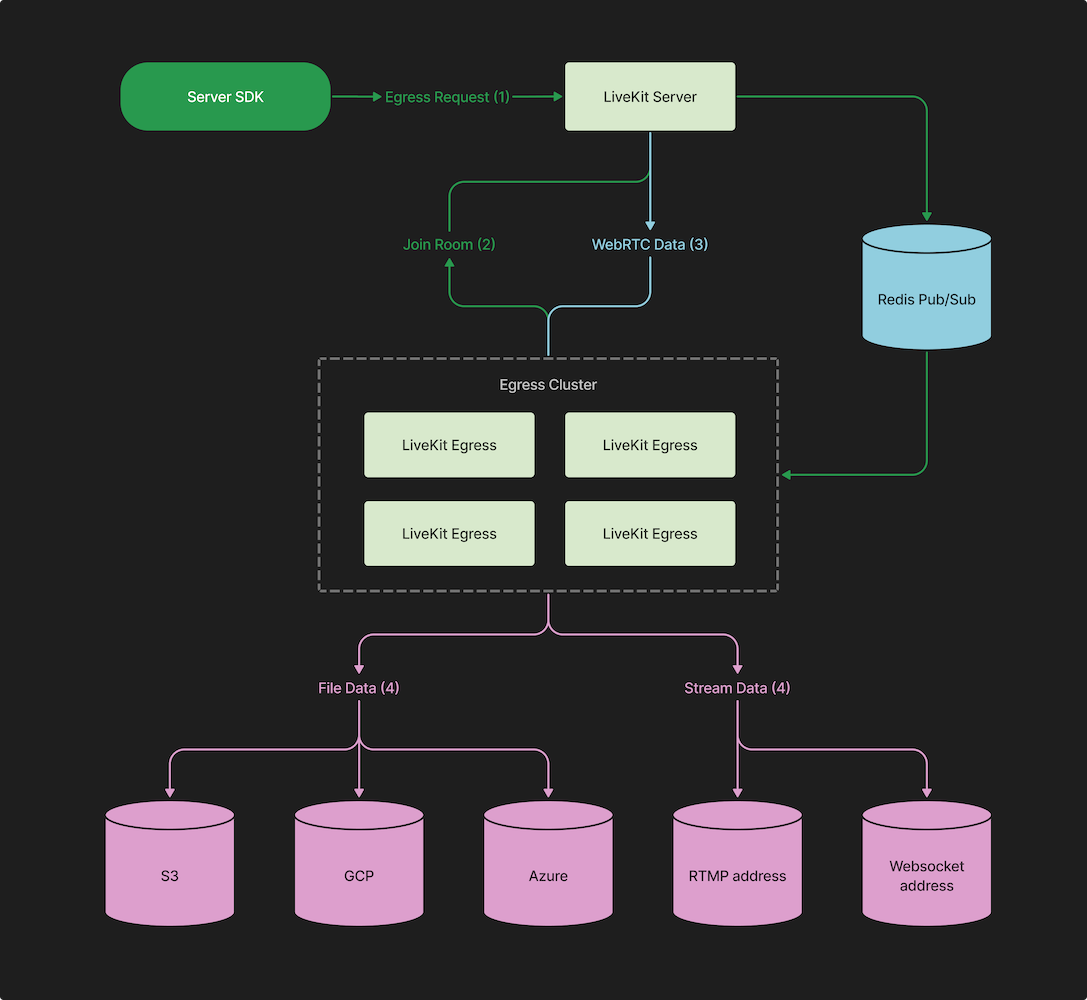

The overriding design goal of the egress system was to keep load off the SFU. Under no circumstances could we impact real-time audio and video performance or quality.

gRPC and HTTP, with typical load balancing schemes, are designed for short-lived, high-throughput requests. A single egress request, by contrast, could last several hours: as long as a room is open. Composite egress requests also require transcoding, which is far more CPU-intensive than a bit-forwarder, nixing any chance of a round-robin load balancing scheme.

So, we built egress as a separate service which uses Redis Pub/Sub to field egress requests and communicate with the SFU. Incoming egress requests are written to a queue observed by a pool of egress workers. A worker may decide to fulfill a request based on its current load. For example, track egress doesn’t involve transcoding and is consequently lighter-weight, whereas room composite runs a full Chrome instance. Granting each worker agency over request fulfillment provides the cluster with flexibility over varying load and queue depth.
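To make the idea of worker agency concrete, here is a minimal sketch of a worker deciding whether to claim a request based on its current load. It is not the egress service's actual scheduling logic: the per-request CPU costs, the capacity figure, and the names worker, tryAccept and estimatedCPU are all invented for illustration, and the Redis queue itself is left out.

```go
package main

import "fmt"

type requestKind int

const (
	trackEgress requestKind = iota
	trackComposite
	roomComposite
)

// estimatedCPU holds made-up per-request costs in CPU cores. The only
// property taken from the text is the ordering: track egress is the
// lightest and room composite (a full Chrome instance) the heaviest.
var estimatedCPU = map[requestKind]float64{
	trackEgress:    0.5,
	trackComposite: 2,
	roomComposite:  4,
}

// worker tracks how much of its (hypothetical) CPU budget is committed.
type worker struct {
	totalCPU float64
	usedCPU  float64
}

// tryAccept claims the request if the worker has capacity left;
// otherwise it declines and leaves the request for another worker.
func (w *worker) tryAccept(kind requestKind) bool {
	cost := estimatedCPU[kind]
	if w.usedCPU+cost > w.totalCPU {
		return false
	}
	w.usedCPU += cost
	return true
}

func main() {
	w := &worker{totalCPU: 4}
	fmt.Println("track egress accepted:", w.tryAccept(trackEgress))
	fmt.Println("room composite accepted:", w.tryAccept(roomComposite))
	fmt.Println("track composite accepted:", w.tryAccept(trackComposite))
}
```

Because each worker makes this decision locally, a busy worker simply leaves heavy requests on the queue for a peer with spare capacity.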

After initially evaluating FFmpeg, we chose GStreamer for transcoding and RTMP output. GStreamer offers greater flexibility, programmatic control via go-gst and, most importantly, robust error handling.

When we spoke to developers who had worked with RTMP, reliability and stability were oft-repeated concerns: transient connection failures would cause entire video pipelines to die mid-stream. GStreamer lets us catch these errors and decide how to handle them.

Universal Egress is a major step forward on our path to provide an open, flexible stack for real-time media. In the coming months, we'll continue to expand the capabilities of this service – our aim is to build a bridge from WebRTC to any destination speaking any protocol, format or encoding. We hope it serves as a powerful new tool in your arsenal!

You can find LiveKit Egress on GitHub.

As always, if you have any questions or comments, feel free to drop in to our LiveKit Community Slack. We'd love to hear from you, and we're always happy to help with any issues you might encounter!