Using WebRTC + React + WebAudio to create spatial audio

Real-time audio is a critical part of our modern digital lives. It enables us to connect with each other no matter where we are in the world. One of the big recent trends in real-time social applications is spatial audio (sometimes called positional audio).

While traditional audio apps play each participant's voice at the same, balanced volume in each ear, spatial audio apps modify each voice to account for its direction and distance relative to you in a virtual world. If someone is to your left, their audio will be louder in your left ear. If someone is far away, their voice will be quieter than that of someone closer to you. This physically based audio provides users with immersion and context that isn't possible with normal, "phone call"-like audio.

In this tutorial we'll go through the steps of implementing spatial audio in a WebRTC + React app. If you're new to WebRTC, that's totally fine! WebRTC stands for Web Real-Time Communication. It's an open standard that modern browsers implement for creating live video, audio, and data functionality. You won't need to be a WebRTC expert to understand this tutorial, but it might help to get familiar with some of the concepts.

Project Setup

This post will primarily focus on the spatial-audio-related code, so we won't go over project setup. If you want more depth on the WebRTC project setup, you can look at the complete example app.

The example app uses LiveKit to interact with WebRTC. LiveKit is an open-source WebRTC SFU and set of client libraries for all major platforms. It's the library of choice for 1000s of WebRTC developers, powering millions of WebRTC sessions every day. LiveKit also shares its name with the website you're on ;).

The easiest way to run the example app yourself is LiveKit Cloud. I'll skip the whole marketing spiel, but we made a mini tutorial that shows you what it's like to set up a project.

Architecture

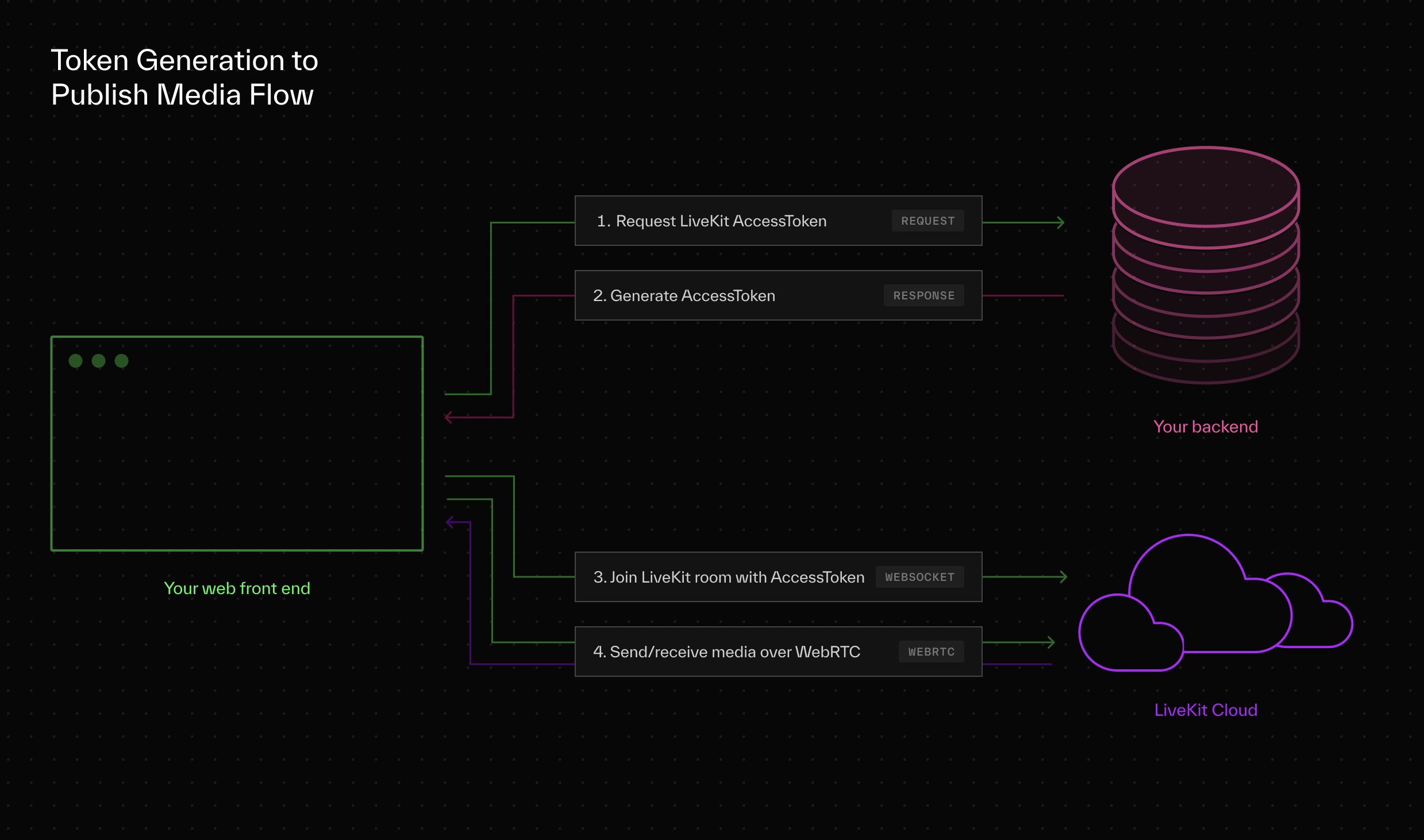

Before jumping into the spatial-audio code, it's worth briefly going over the architecture of the app at a high level.

The app has a frontend component and a backend component. The backend's only job is token generation: it is the gatekeeper that decides who is allowed to connect to a room. The frontend implements the spatial audio and sends microphone and position data to LiveKit Cloud.

Spatial Audio

Great, now that we've got all of that covered, we can get into the meat of this post: implementing the spatial audio functionality.

Designing the API

Let's first think about the problem we're tackling. Spatial audio implies that each participant, whether local or remote, has a position. We'll keep things in 2D for this post but these same concepts are easily extendable to 3D.

(If you're interested in seeing an example app for 3D spatial audio, join our Slack and tell us about it.)

So for inputs to the SpatialAudioController - we have an (x,y) for the local user and an (x,y) for every remote user. In LiveKit, users are called Participants - so we could say that each participant has an (x,y).

But we'll actually make our SpatialAudioController a little more general, because we may not want all of a Participant's audio to be spatial at the same position. For example, if the participant is talking and playing music, we might want the music to have global audio and the microphone to have spatial audio.

So instead of an (x,y) for every participant, we'll use an (x,y) for each spatial TrackPublication and then an (x,y) for your own position.

So here's what our API looks like in code:

    type Vector2 = {
      x: number;
      y: number;
    };

    type TrackPosition = {
      trackPublication: TrackPublication;
      position: Vector2;
    };

    type SpatialAudioControllerProps = {
      trackPositions: TrackPosition[];
      myPosition: Vector2;
    };

Using the PannerNode

Great, so we've designed our API - now let's get the SpatialAudioController to render the audio spatially. To do this we'll be using WebAudio, specifically the PannerNode from WebAudio.

The PannerNode takes an (x,y,z) for position as well as an (x,y,z) for orientation and modifies the audio accordingly. Since we're in 2D, we won't use orientation. The other difference between 2D and 3D is the coordinate system. The PannerNode uses y as the vertical component and xz for the planar components. In 2D we only have the planar components so we still map x → x but we'll map y → z.
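
As a rough sketch of that mapping, the helper below converts a 2D point into the (x, y, z) triple the PannerNode expects. The names `toPannerCoordinates` and `PannerCoordinates` are made up for this illustration and aren't part of the example app or of WebAudio.

```typescript
type Vector2 = { x: number; y: number };

// Hypothetical shape for this sketch: the three values you would write to
// positionX, positionY and positionZ on a PannerNode.
type PannerCoordinates = { x: number; y: number; z: number };

// Map a 2D point onto WebAudio's 3D space: 2D x stays on the x axis,
// 2D y moves to the z axis, and the vertical axis (y) stays at 0.
function toPannerCoordinates(point: Vector2): PannerCoordinates {
  return { x: point.x, y: 0, z: point.y };
}

// A source 3 units to the right and 4 units along our 2D y axis.
const coords = toPannerCoordinates({ x: 3, y: 4 });
console.log(coords); // { x: 3, y: 0, z: 4 }
```

Keeping the vertical component at 0 means every source sits on the same plane as the listener, which is all a 2D world needs.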

Note that the PannerNode does not accept two positions (local and remote); it accepts only one. So we'll use math to convert our two positions into a relative position that we can give to the PannerNode. Luckily the math is simple:

    const relativePosition = {
      x: remotePosition.x - myPosition.x,
      y: remotePosition.y - myPosition.y,
    };

Rendering a Single Spatial Audio Track

So with that, let's use the PannerNode and create a component that renders a single track spatially:

    import { useCallback, useEffect, useMemo, useRef, useState } from "react";
    import { LocalTrackPublication, TrackPublication } from "livekit-client";

    type SpatialPublicationRendererProps = {
      trackPublication: TrackPublication;
      position: { x: number; y: number };
      myPosition: { x: number; y: number };
      audioContext: AudioContext;
    };

    function SpatialPublicationRenderer({
      trackPublication,
      position,
      myPosition,
      audioContext,
    }: SpatialPublicationRendererProps) {
      const audioEl = useRef<HTMLAudioElement | null>(null);
      const sourceNode = useRef<MediaStreamAudioSourceNode | null>(null);
      const panner = useRef<PannerNode | null>(null);
      const [relativePosition, setRelativePosition] = useState<{
        x: number;
        y: number;
      }>({
        x: 1000,
        y: 1000,
      }); // Set as very far away for our initial values

      // Get the media stream from the track publication
      const mediaStream = useMemo(() => {
        if (
          trackPublication instanceof LocalTrackPublication &&
          trackPublication.track
        ) {
          const mediaStreamTrack = trackPublication.track.mediaStreamTrack;
          return new MediaStream([mediaStreamTrack]);
        }
        return trackPublication.track?.mediaStream || null;
      }, [trackPublication]);

      // Cleanup function for all of the WebAudio nodes we made
      const cleanupWebAudio = useCallback(() => {
        if (panner.current) panner.current.disconnect();
        if (sourceNode.current) sourceNode.current.disconnect();
        panner.current = null;
        sourceNode.current = null;
      }, []);

      // Calculate relative position when either position changes
      useEffect(() => {
        setRelativePosition({
          x: position.x - myPosition.x,
          y: position.y - myPosition.y,
        });
      }, [myPosition.x, myPosition.y, position.x, position.y]);

      // Set up the panner node for desktop
      useEffect(() => {
        // Cleanup any other nodes we may have previously created
        cleanupWebAudio();

        // Early out if we're missing anything
        if (!audioEl.current || !trackPublication.track || !mediaStream)
          return cleanupWebAudio;

        // Create the entry-node into WebAudio.
        // This turns our mediaStream into a usable WebAudio node.
        sourceNode.current = audioContext.createMediaStreamSource(mediaStream);

        // Initialize the PannerNode and its values
        panner.current = audioContext.createPanner();
        panner.current.coneOuterAngle = 360;
        panner.current.coneInnerAngle = 360;
        panner.current.positionX.setValueAtTime(1000, 0); // set far away initially so we don't hear it at full volume
        panner.current.positionY.setValueAtTime(0, 0);
        panner.current.positionZ.setValueAtTime(0, 0);
        panner.current.distanceModel = "exponential";
        panner.current.coneOuterGain = 1;
        panner.current.refDistance = 100;
        panner.current.maxDistance = 500;
        panner.current.rolloffFactor = 2;

        // Connect the nodes to each other
        sourceNode.current
          .connect(panner.current)
          .connect(audioContext.destination);

        // Attach the mediaStream to an AudioElement. This is just a
        // quirky requirement of WebAudio to get the pipeline to play
        // when dealing with MediaStreamAudioSource nodes
        audioEl.current.srcObject = mediaStream;
        audioEl.current.play();

        return cleanupWebAudio;
      }, [
        panner,
        trackPublication.track,
        cleanupWebAudio,
        audioContext,
        trackPublication,
        mediaStream,
      ]);

      // Update the PannerNode's position values to our
      // calculated relative position (2D x maps to x, 2D y maps to z).
      useEffect(() => {
        if (!audioEl.current || !panner.current) return;
        panner.current.positionX.setTargetAtTime(relativePosition.x, 0, 0.02);
        panner.current.positionZ.setTargetAtTime(relativePosition.y, 0, 0.02);
      }, [relativePosition.x, relativePosition.y, panner]);

      return <audio muted={true} ref={audioEl} />;
    }

So that's it: that's all you need to render a single TrackPublication spatially.
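
To get a feel for what the distanceModel, refDistance, and rolloffFactor settings do, here's a small sketch of the gain formula the Web Audio specification gives for the "exponential" model. `exponentialGain` and `distanceTo` are hypothetical helpers written for this post, not WebAudio APIs; the browser does this computation for you inside the PannerNode. As far as we can tell from the spec, maxDistance is only consulted by the "linear" model, so it doesn't appear here.

```typescript
// Gain for WebAudio's "exponential" distance model:
//   gain = (max(distance, refDistance) / refDistance) ^ -rolloffFactor
function exponentialGain(
  distance: number,
  refDistance: number = 100, // same value as the panner in this post
  rolloffFactor: number = 2, // same value as the panner in this post
): number {
  // Distances inside refDistance are clamped, so the gain never exceeds 1.
  const clamped = Math.max(distance, refDistance);
  return Math.pow(clamped / refDistance, -rolloffFactor);
}

// Distance of a relative 2D position from the listener at the origin.
function distanceTo(x: number, y: number): number {
  return Math.hypot(x, y);
}

console.log(exponentialGain(50)); // 1: inside refDistance, full volume
console.log(exponentialGain(200)); // 0.25: twice refDistance, a quarter of the gain
// The initial "far away" relative position of (1000, 1000) is nearly silent.
console.log(exponentialGain(distanceTo(1000, 1000)) < 0.01); // true
```

With these values, a voice stays at full volume within 100 units and drops off quickly after that, which is why starting each track at a far-away position keeps it from blasting at full volume before the first real position arrives.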

Putting It All Together

We can now fill in the SpatialAudioController to render a list of TrackPublications spatially:

    export function SpatialAudioController({
      trackPositions,
      myPosition,
    }: SpatialAudioControllerProps) {
      const audioContext = useMemo(() => new AudioContext(), []);
      return (
        <>
          {trackPositions.map((tp) => {
            return (
              <SpatialPublicationRenderer
                key={`${tp.trackPublication.trackSid}`}
                trackPublication={tp.trackPublication}
                position={tp.position}
                myPosition={myPosition}
                audioContext={audioContext}
              />
            );
          })}
        </>
      );
    }

The complete spatial audio rendering code for the example app can be found in the SpatialAudioController.tsx file of the example project repo.
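
For a sense of how a parent component might assemble the trackPositions prop, here's a rough sketch. It uses simplified stand-in types (`PublicationLike`, `ParticipantLike`, `TrackPositionLike`) rather than LiveKit's real classes, and `buildTrackPositions` is a hypothetical helper, so treat the field names as assumptions; the example app may do this differently.

```typescript
type Vector2 = { x: number; y: number };

// Stand-ins for this sketch only; not LiveKit's actual types.
type PublicationLike = {
  trackSid: string;
  source: "microphone" | "screen_share_audio";
};
type ParticipantLike = { identity: string; publications: PublicationLike[] };
type TrackPositionLike = { trackPublication: PublicationLike; position: Vector2 };

// Pair every microphone publication with its owner's position.
// Other audio (e.g. shared music) is skipped so it can stay global.
function buildTrackPositions(
  participants: ParticipantLike[],
  positions: Map<string, Vector2>, // keyed by participant identity
): TrackPositionLike[] {
  const result: TrackPositionLike[] = [];
  for (const participant of participants) {
    const position = positions.get(participant.identity);
    if (!position) continue; // no known position yet, so nothing to place
    for (const publication of participant.publications) {
      if (publication.source === "microphone") {
        result.push({ trackPublication: publication, position });
      }
    }
  }
  return result;
}

const trackPositions = buildTrackPositions(
  [
    {
      identity: "alice",
      publications: [
        { trackSid: "TR_mic", source: "microphone" },
        { trackSid: "TR_music", source: "screen_share_audio" },
      ],
    },
    { identity: "bob", publications: [{ trackSid: "TR_bob", source: "microphone" }] },
  ],
  new Map<string, Vector2>([["alice", { x: 10, y: 20 }]]),
);
console.log(trackPositions.map((tp) => tp.trackPublication.trackSid)); // [ 'TR_mic' ]
```

Only alice's microphone ends up in the list: her music track is left out so it can play globally, and bob is skipped until a position for him is known.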

Conclusion

In this post we showed you how you can use WebRTC together with WebAudio to create spatial audio. A full demo using this technique is hosted at spatial-audio-demo.livekit.io for you to mess around with.

We'll be rolling out tutorials for other aspects of this example app shortly, for example, how to send player position data using data channels. In the meantime, check out https://github.com/livekit-examples/spatial-audio for the full example app source code.