Stream music over WebRTC using React and WebAudio

With technology built into every modern web browser, you can live-stream audio to other people using just a URL. This post is a step-by-step guide that shows you how. We use WebAudio and LiveKit’s WebRTC stack to build a real-time application for listening to music with your friends. The full code is included at the end of this post.

Remember turntable.fm? It was a social DJ platform where users could join virtual rooms and listen to music together. When it launched in 2011, it spread like wildfire: at Twitter’s TGIF events, we had it up on a big projector screen and employees took turns DJing.

Turntable used polling requests for synchronization and either Flash or a hidden YouTube player for streaming audio. How might we build its core feature, listening to music together in real time, if we did it today?

We’ll leverage a couple of modern browser technologies to make this happen:

- WebRTC is a versatile set of networking APIs that can be used to build a wide range of real-time applications. It’s also one of the few standardized ways for a browser to send data over UDP rather than TCP, which helps when streaming media with low latency.

- WebAudio is a high-level JavaScript API for processing and synthesizing audio in web applications.

Typically, WebRTC is used to share webcam and/or microphone streams with others in real time, but with WebAudio we can publish an audio stream from any source, such as a URL.
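As a quick illustration of "any source", here is a minimal sketch that turns a synthesized tone, rather than a file, into a publishable audio track. The `createToneTrack` helper and the small structural interfaces are hypothetical, introduced only so the logic can run outside a browser; a browser `AudioContext` provides `createOscillator()` and `createMediaStreamDestination()` with these members.

```typescript
// Structural stand-ins for the Web Audio members this sketch uses.
// A browser AudioContext and its nodes provide all of them.
interface ConnectableNode {
  connect(destination: ConnectableNode): ConnectableNode;
}

interface OscillatorLike extends ConnectableNode {
  frequency: { value: number };
  start(): void;
}

interface StreamDestinationLike<T> extends ConnectableNode {
  stream: { getAudioTracks(): T[] };
}

interface ToneContext<T> {
  createOscillator(): OscillatorLike;
  createMediaStreamDestination(): StreamDestinationLike<T>;
}

// Hypothetical helper: wires oscillator -> MediaStreamAudioDestinationNode
// and returns the destination's audio track, ready to be published.
function createToneTrack<T>(ctx: ToneContext<T>, frequencyHz = 440): T {
  const oscillator = ctx.createOscillator();
  oscillator.frequency.value = frequencyHz;

  const destination = ctx.createMediaStreamDestination();
  oscillator.connect(destination);
  oscillator.start();

  // A MediaStreamAudioDestinationNode's stream holds exactly one audio track.
  return destination.stream.getAudioTracks()[0];
}
```

In a browser you would pass `new AudioContext()` as `ctx` and hand the returned track to LiveKit’s `publishTrack()`, the same way the URL-based track is published later in this post.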

Before diving into the code, note that the techniques here can be used with any WebRTC stack, but we use LiveKit to greatly simplify things. To do the same, follow this mini tutorial to set up a project.

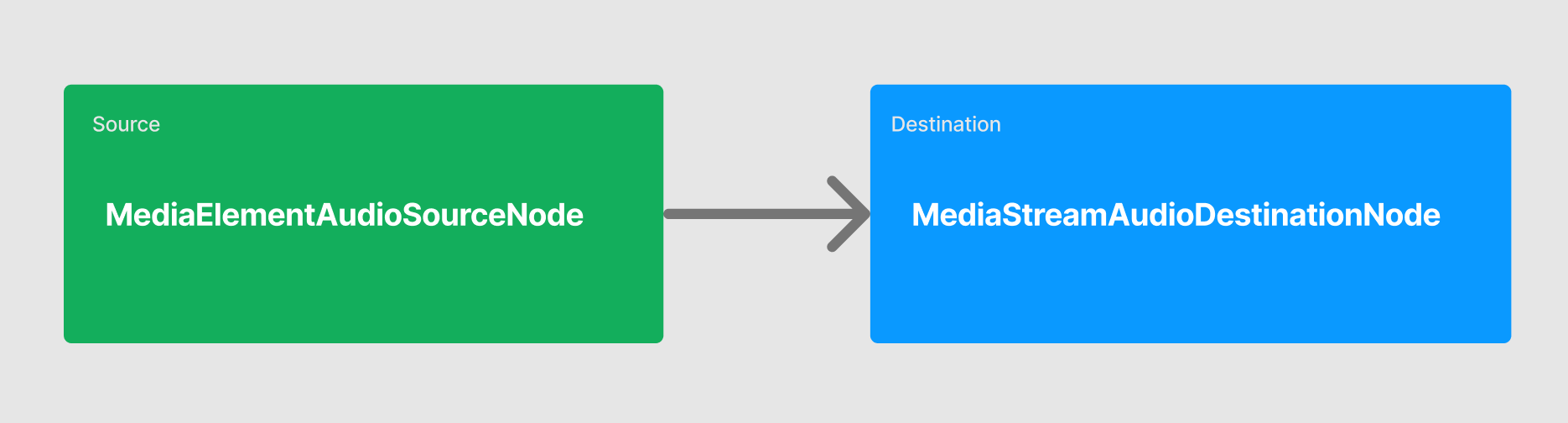

Using the MediaElementAudioSourceNode and MediaStreamAudioDestinationNode

WebAudio is built around the concept of nodes, which can be sources, modifiers, or destinations of audio data. These nodes are linked together to form an audio processing graph: audio enters at a source node, flows through the graph while each node along the way processes it, and leaves at a destination node as the output audio.
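To make the source, modifier, and destination roles concrete, here is a minimal sketch of a three-node graph: a media element source, a GainNode acting as a modifier, and a MediaStreamAudioDestinationNode. The `buildGainGraph` helper and its structural interfaces are hypothetical (they let the code run outside a browser); a browser `AudioContext` provides `createMediaElementSource()`, `createGain()`, and `createMediaStreamDestination()`.

```typescript
// Structural stand-ins for the Web Audio members used here; a browser
// AudioContext and its nodes provide all of them.
interface ConnectableNode {
  connect(destination: ConnectableNode): ConnectableNode;
}

interface GraphContext<El, T> {
  createMediaElementSource(element: El): ConnectableNode;
  createGain(): ConnectableNode & { gain: { value: number } };
  createMediaStreamDestination(): ConnectableNode & {
    stream: { getAudioTracks(): T[] };
  };
}

// Hypothetical helper: source -> gain (modifier) -> destination.
// Returns the destination's audio track for publishing over WebRTC.
function buildGainGraph<El, T>(ctx: GraphContext<El, T>, element: El, volume: number): T {
  const source = ctx.createMediaElementSource(element); // source node
  const gain = ctx.createGain(); // modifier node
  const destination = ctx.createMediaStreamDestination(); // destination node

  // Gain multiplies each sample: 1 leaves it unchanged, 0.5 halves the amplitude.
  gain.gain.value = volume;
  source.connect(gain);
  gain.connect(destination);

  return destination.stream.getAudioTracks()[0];
}
```

Dropping the gain node and connecting the source straight to the destination gives the simpler graph this post actually uses.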

You can put together some pretty complex audio processing workflows with WebAudio, but our use case is simple:

We’ll use an <audio> element as the input to a MediaElementAudioSourceNode and connect that node to a MediaStreamAudioDestinationNode, whose output is a MediaStream containing a single audio MediaStreamTrack. We can then publish that track over WebRTC. Let’s do this in a React component:

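```tsx
import { useEffect, useRef } from "react"

type AudioURLPlaybackProps = { url: string | null }

export const AudioURLPlayback = ({ url }: AudioURLPlaybackProps) => {
  const audioElRef = useRef<HTMLAudioElement>(null)
  // Created lazily: useRef(new AudioContext()) would build a new context every render
  const audioCtxRef = useRef<AudioContext | null>(null)

  useEffect(() => {
    // ...
    const audioCtx = (audioCtxRef.current ??= new AudioContext())
    // Source node: reroutes the <audio> element's output into the graph
    const audioSrc = audioCtx.createMediaElementSource(audioElRef.current!)
    // Destination node: exposes the graph's output as a MediaStream
    const audioDest = audioCtx.createMediaStreamDestination()
    audioSrc.connect(audioDest)
    // Rerouting silences the element, so also feed the speakers for local playback
    audioSrc.connect(audioCtx.destination)
  }, [])

  // crossOrigin="anonymous": without CORS, WebAudio outputs silence for cross-origin files
  return (
    <audio
      ref={audioElRef}
      src={url ?? undefined}
      preload="auto"
      crossOrigin="anonymous"
      loop
      autoPlay
    />
  )
}
```

Publishing a MediaStreamTrack over a WebRTC session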

With our simple WebAudio node graph wired up, we can take the MediaStreamTrack from its output and publish it to other listeners connected to the same WebRTC session:

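```tsx
import { useEffect } from "react"
import { Track } from "livekit-client"
import { useLocalParticipant } from "@livekit/components-react"

export const AudioURLPlayback = ({ url }: AudioURLPlaybackProps) => {
  // ...
  const { localParticipant } = useLocalParticipant()

  useEffect(() => {
    // ...
    // The destination node's MediaStream carries a single audio track
    const publishedTrack = audioDest.stream.getAudioTracks()[0]
    // publishTrack accepts a plain MediaStreamTrack and returns a Promise
    localParticipant
      .publishTrack(publishedTrack, {
        name: "audio_from_url",
        source: Track.Source.Unknown,
      })
      .catch((err) => console.error("Failed to publish audio track", err))
  }, [localParticipant])

  // ...
}
```

Starting a WebRTC session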

Now that we have code which pulls audio from a URL into a WebRTC audio track and publishes it in a live session, all that’s left is some boilerplate to create the session:

```tsx
import { LiveKitRoom, RoomAudioRenderer } from "@livekit/components-react"
import { AudioURLPlayback } from "./your_component_path"

type RoomProps = { token: string; wsUrl: string; audioUrl: string | null }

export const Room = ({ token, wsUrl, audioUrl }: RoomProps) => {
  return (
    <LiveKitRoom token={token} serverUrl={wsUrl} connect={true}>
      {/* Publishes the audio at audioUrl when a URL is set */}
      <AudioURLPlayback url={audioUrl} />
      {/* Plays the audio tracks published by other participants */}
      <RoomAudioRenderer />
    </LiveKitRoom>
  )
}
```

And, boom! 💥 That’s really all there is to it. If you take this code and run it in a couple of browser tabs (note: make sure only one user is publishing a valid audio URL), you should hear it playing back from both instances. We use this exact technique in a spatial audio demo we published earlier.
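One way to enforce the "valid audio URL" part is to check the URL before building any audio graph. The `isPlayableAudioUrl` guard below is a hypothetical helper, not part of LiveKit or WebAudio: it only confirms that the string parses as an absolute http(s) URL, so it cannot tell whether the file exists or is served with the CORS headers WebAudio needs for cross-origin audio.

```typescript
// Hypothetical guard: true only for absolute http:// or https:// URLs.
// It fetches nothing, so it cannot check that the file exists, is audio,
// or is served with CORS headers.
function isPlayableAudioUrl(url: string | null | undefined): url is string {
  if (!url) return false; // null, undefined, or ""
  try {
    const { protocol } = new URL(url); // throws a TypeError on unparseable input
    return protocol === "http:" || protocol === "https:";
  } catch {
    return false;
  }
}
```

In the component, the effect could start with `if (!isPlayableAudioUrl(url)) return;` so that listeners, whose `url` is `null`, never publish a track.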

Here’s the full code, including some boilerplate and parts we edited out for clarity:

```tsx
import { useEffect } from "react";
import { Track } from "livekit-client";
import { useLocalParticipant } from "@livekit/components-react";

type AudioURLPlaybackProps = { url: string | null };

export const AudioURLPlayback = ({ url }: AudioURLPlaybackProps) => {
  const { localParticipant } = useLocalParticipant();

  useEffect(() => {
    // Listeners have no URL, so they publish nothing
    if (!url) return;

    // A fresh <audio> element per URL: createMediaElementSource() can be called
    // only once per element, so reusing one would throw when the effect re-runs
    // (for example under React StrictMode)
    const audioEl = new Audio();
    audioEl.crossOrigin = "anonymous"; // without CORS, WebAudio outputs silence for cross-origin files
    audioEl.preload = "auto";
    audioEl.loop = true;
    audioEl.src = url;

    // Graph: <audio> -> MediaStreamAudioDestinationNode (published over WebRTC)
    //                -> audioContext.destination (local speakers)
    const audioContext = new AudioContext();
    const audioSourceNode = audioContext.createMediaElementSource(audioEl);
    const audioDestinationNode = audioContext.createMediaStreamDestination();
    audioSourceNode.connect(audioDestinationNode);
    audioSourceNode.connect(audioContext.destination);

    // Autoplay policies may keep playback suspended until the user interacts with the page
    audioContext.resume().catch(console.warn);
    audioEl.play().catch(console.warn);

    // Publish audio to the LiveKit room
    const publishedTrack = audioDestinationNode.stream.getAudioTracks()[0];
    const published = localParticipant
      .publishTrack(publishedTrack, {
        name: "audio_from_url",
        source: Track.Source.Unknown,
      })
      .then(
        () => true,
        (err) => {
          console.error("Failed to publish audio track", err);
          return false;
        },
      );

    // Return the cleanup function itself (not its result); React calls it
    // when url or localParticipant changes and on unmount
    return () => {
      // Wait for publishing to settle so a slow publish can't leave a stray track
      published.then((ok) => {
        if (ok) localParticipant.unpublishTrack(publishedTrack).catch(console.warn);
      });
      audioEl.pause();
      audioSourceNode.disconnect();
      void audioContext.close();
    };
  }, [url, localParticipant]);

  // The <audio> element lives only inside the effect, so nothing is rendered
  return null;
};
```

```tsx
import { useState } from "react";
import { LiveKitRoom, RoomAudioRenderer } from "@livekit/components-react";
import { AudioURLPlayback } from "@/components/AudioURLPlayback";

type RoomProps = {
  token: string;
  wsUrl: string;
};

export const Room = ({ token, wsUrl }: RoomProps) => {
  // Set this to whatever you like; the DJ sets a URL, listeners leave it null
  const [audioUrl, setAudioUrl] = useState<string | null>(null);

  return (
    <LiveKitRoom token={token} serverUrl={wsUrl} connect={true}>
      <AudioURLPlayback url={audioUrl} />
      {/* Plays the audio tracks published by other participants */}
      <RoomAudioRenderer />
    </LiveKitRoom>
  );
};
```

Builders gonna build

You should now have everything you need to publish arbitrary media in a WebRTC session. If you have any feedback on this post or end up building something cool using or inspired by it, pop into the LiveKit Slack community and tell us. We love to hear from folks!