LiveKit coming to React Native!

Increasingly, developers are thinking multi-platform, looking for ways to get more done with fewer resources and less maintenance overhead. React developers, in particular, naturally consider React Native as an entry point into mobile development. Since we launched LiveKit last summer, React Native has consistently been the platform we're most often asked to support. The wait is over: today you can start using a beta release of our React Native SDK.

Like our Unity WebGL and React SDKs, this one is also built atop our battle-tested JavaScript SDK and ships with the same hooks found in @livekit/react-core.

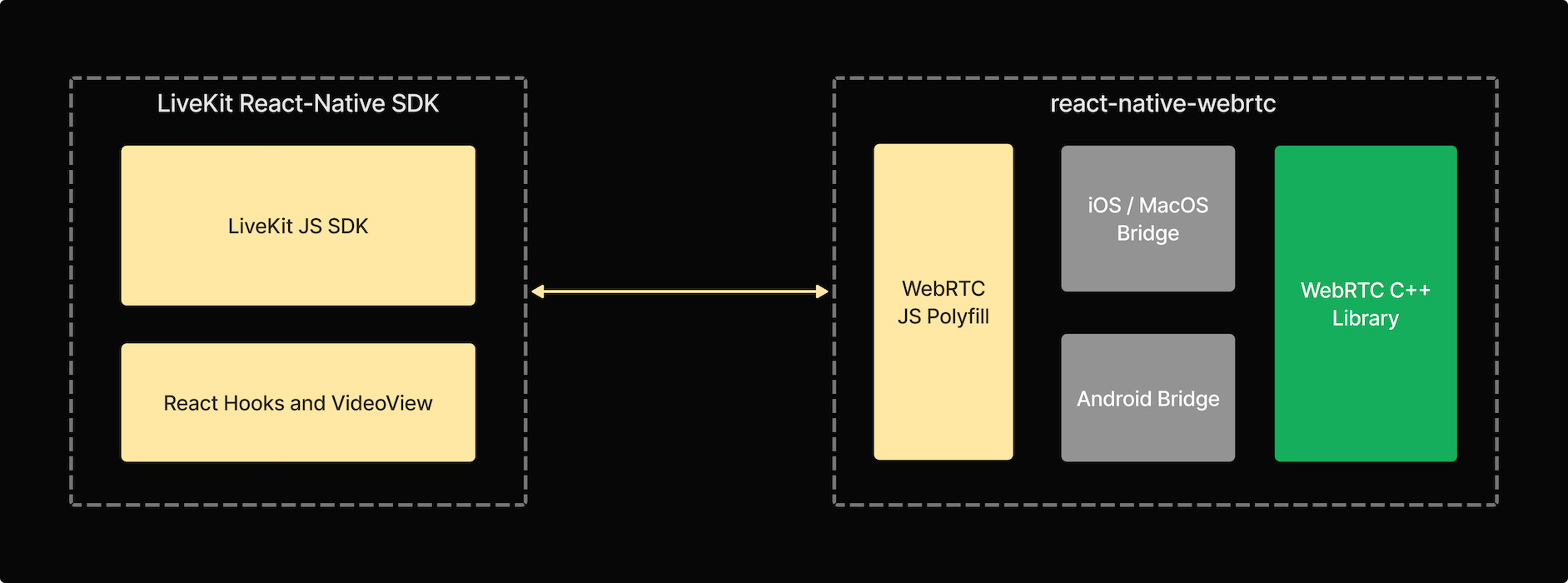

WebRTC on React Native

You may be thinking, if React Native is executing JavaScript, why couldn't we simply call into our JS SDK directly?

This is because our JS SDK leverages WebRTC APIs exposed through all major web browsers. While the APIs are accessible to JavaScript applications, the underlying implementation is part of the browser itself, originating from the Chromium project. React Native's runtime doesn't include these APIs, so we must provide our own implementation.

Fortunately, there's already an excellent implementation of WebRTC for React Native: react-native-webrtc. It packages the native WebRTC libraries from Chromium and provides polyfills for the WebRTC APIs by bridging to native wrapper code.
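To make the polyfill idea concrete, here is a minimal, self-contained sketch of the general pattern: check which WebRTC globals a runtime is missing, then install substitutes under the same names. The names `missingApis`, `installPolyfills`, `FakePeerConnection`, and `FakeMediaStream` are hypothetical and exist only for illustration. This is not react-native-webrtc's actual code; there, the substitutes would bridge to native libraries.

```typescript
// The browser globals a WebRTC-based SDK typically expects to find.
const REQUIRED_APIS = ['RTCPeerConnection', 'MediaStream'];

type Scope = { [name: string]: unknown };

// Report which of the required globals are absent from a runtime's global scope.
function missingApis(scope: Scope): string[] {
  return REQUIRED_APIS.filter((name) => typeof scope[name] === 'undefined');
}

// Hypothetical stand-ins; a real polyfill would forward calls to native code.
class FakePeerConnection {}
class FakeMediaStream {}

// Install an implementation for every missing global, leaving existing ones alone.
function installPolyfills(scope: Scope, impls: Scope): string[] {
  const installed: string[] = [];
  for (const name of missingApis(scope)) {
    if (typeof impls[name] !== 'undefined') {
      scope[name] = impls[name];
      installed.push(name);
    }
  }
  return installed;
}

// A bare runtime (like React Native's) starts without the WebRTC globals.
const bareRuntime: Scope = {};
console.log(missingApis(bareRuntime)); // both names are reported missing
installPolyfills(bareRuntime, {
  RTCPeerConnection: FakePeerConnection,
  MediaStream: FakeMediaStream,
});
console.log(missingApis(bareRuntime)); // nothing is missing afterwards
```

Because the substitutes are registered under the names browser code already uses, an SDK written against the browser APIs can run without modification once they are in place.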

Usage

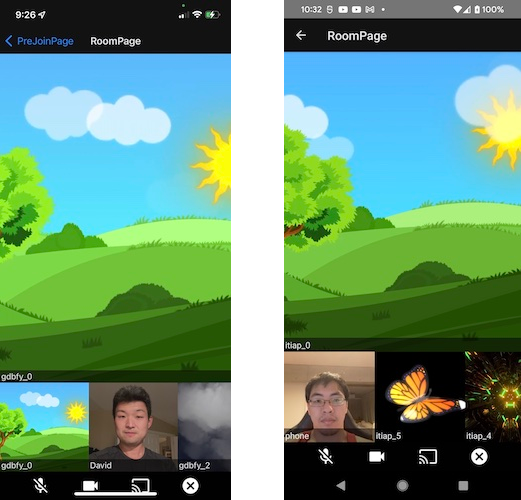

Let's dive into a quick example of building with LiveKit's React Native SDK. We also have a more full-featured example in the project's repo.

    import React, { useEffect, useState } from 'react';
    import { Room, Track } from 'livekit-client';
    import { useRoom, VideoView } from 'livekit-react-native';

    export const RoomPage = ({ url, token }: { url: string; token: string }) => {
      // Set up Room state
      const [room] = useState(() => new Room());
      const { participants } = useRoom(room);

      // Connect to the Room
      useEffect(() => {
        room.connect(url, token, {}).then(() => {
          // Enable camera/microphone as needed, once connected
          room.localParticipant.setMicrophoneEnabled(true);
          room.localParticipant.setCameraEnabled(true);
        });
        // Disconnect when the component unmounts or its inputs change
        return () => {
          room.disconnect();
        };
      }, [url, token, room]);

      // Display the video of the first participant.
      const videoView = participants.length > 0 ? (
        <VideoView
          style={{ flex: 1, width: '100%' }}
          videoTrack={participants[0].getTrack(Track.Source.Camera)?.videoTrack}
        />
      ) : null;
      return videoView;
    };

The above snippet will get you connected to a room and streaming audio and video from your device, while receiving the same from other participants. The provided VideoView component handles rendering any video streams; just pass in the specific track from a participant you want to see. Audio is automatically handled and routed to your device's audio output.
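Choosing which track to hand to VideoView is ordinary list logic. As a rough sketch, the helper below picks the first participant that is actually publishing a camera track, instead of always using the first entry in the list. The `ParticipantLike` and `PublicationLike` interfaces and the `firstCameraTrack` name are assumptions made for illustration. They only mirror the `getTrack(...)?.videoTrack` shape used in the snippet above and are not types exported by the SDK.

```typescript
// Hypothetical minimal shapes, mirroring only what the snippet above relies on.
interface PublicationLike<T> {
  videoTrack?: T;
}

interface ParticipantLike<T> {
  identity: string;
  getTrack(source: string): PublicationLike<T> | undefined;
}

// Return the first available camera track, or undefined if nobody has one.
function firstCameraTrack<T>(
  participants: ParticipantLike<T>[],
  cameraSource: string
): T | undefined {
  for (const participant of participants) {
    const publication = participant.getTrack(cameraSource);
    if (publication && publication.videoTrack !== undefined) {
      return publication.videoTrack;
    }
  }
  return undefined;
}
```

In the component, a helper like this could stand in for the `participants[0]` lookup, with `Track.Source.Camera` passed as `cameraSource`. It would likely need adapting to the SDK's real types.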

Over the next few weeks, we'll be adding some bells and whistles to the React Native SDK, such as adaptive stream and dynamic broadcast, which put bandwidth management on autopilot, especially in larger rooms. We're stoked that developers can now write code once and ship rich, real-time audio and video experiences across both iOS and Android.

Give our SDK a try and tell us about what you're building with it! We'd love to help. You can always find us and other builders hangin' in our developer community. 👋