Bringing Zoom's end-to-end optimizations to WebRTC

When we started LiveKit, our aim was to build an end-to-end, open source WebRTC stack accessible to all. After 20 months and nearly 1000 commits, we're releasing version 1.0 of LiveKit. This also includes 1.0 releases for these client SDKs:

- JS 1.0

- Swift 1.0 (iOS and macOS)

- Kotlin 1.0 (Android)

- Flutter 1.0

- React Core 1.0 and React Components 1.0

- Unity Web 1.0

In this post we'll dive deeper into end-to-end streaming optimizations, a particularly exciting aspect of LiveKit 1.0. WebRTC-based conferencing software typically struggles once a meeting has more than a handful of participants. Zoom, using a custom protocol, has done an incredible job of scaling: it keeps working despite sub-optimal network conditions and large numbers of participants on screen. It accomplishes this primarily through efficient use of bandwidth.

Most quality and performance issues in real-time communications, whether conferencing or cloud gaming, come from using more bandwidth than a network can sustain. A router drops packets when it's unable to keep up with the delivery rate, leading to stuttering video and/or robotic-sounding audio.

Solving these problems as the number of participants grows is tricky and requires tight coordination between client and server. With our open signaling protocol for client-server communication, we're able to employ techniques similar to Zoom's, but using WebRTC.

Adaptive stream

How can you fetch 25 HD videos simultaneously? The short answer is: you don't. Even setting aside that most clients lack the compute to decode that many 720p streams, few networks can steadily pull 50Mbps of data. To handle that many publishers, we have to reduce the bandwidth allocated to each one. We can do so by reducing resolution and frame rate, but by how much? There's a balance to strike between performance and perceived quality.

As more participants join a meeting, on-screen video elements get smaller. A smaller element tolerates a lower-resolution image, which needs only a fraction of the original bitrate without a noticeable loss in visual acuity.

LiveKit leverages two key features to automatically reduce bandwidth utilization as the number of participants grows. The first is simulcast, which allows each track to be published simultaneously in multiple encodings. Simulcast is a critical technique for scaling WebRTC and is enabled by default across all LiveKit client SDKs.
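For a sense of what simulcast asks of the browser, here is a minimal sketch of the encoding list a publisher could pass to the standard WebRTC `RTCPeerConnection.addTransceiver()` call. The `simulcastEncodings` and `layerDimensions` helpers are hypothetical, and the three-layer split, the `q`/`h`/`f` rid names and the bitrates are illustrative assumptions rather than LiveKit's actual defaults.

```javascript
// Hypothetical helper: builds RTCRtpEncodingParameters for a three-layer
// simulcast publish. Bitrates (bps) are illustrative assumptions, not LiveKit defaults.
function simulcastEncodings(maxBitrates = { q: 150_000, h: 500_000, f: 1_700_000 }) {
  return [
    // Quarter resolution (e.g. 320x180 from a 1280x720 source)
    { rid: 'q', scaleResolutionDownBy: 4, maxBitrate: maxBitrates.q },
    // Half resolution (e.g. 640x360)
    { rid: 'h', scaleResolutionDownBy: 2, maxBitrate: maxBitrates.h },
    // Full resolution (e.g. 1280x720)
    { rid: 'f', scaleResolutionDownBy: 1, maxBitrate: maxBitrates.f },
  ];
}

// Hypothetical helper: dimensions each layer would have for a given source, rounded down.
function layerDimensions(width, height, encodings) {
  return encodings.map((e) => ({
    rid: e.rid,
    width: Math.floor(width / e.scaleResolutionDownBy),
    height: Math.floor(height / e.scaleResolutionDownBy),
  }));
}
```

In a browser, the list would be used as `pc.addTransceiver(videoTrack, { direction: 'sendonly', sendEncodings: simulcastEncodings() })`. Since LiveKit's client SDKs enable simulcast by default, application code normally never builds this list by hand.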

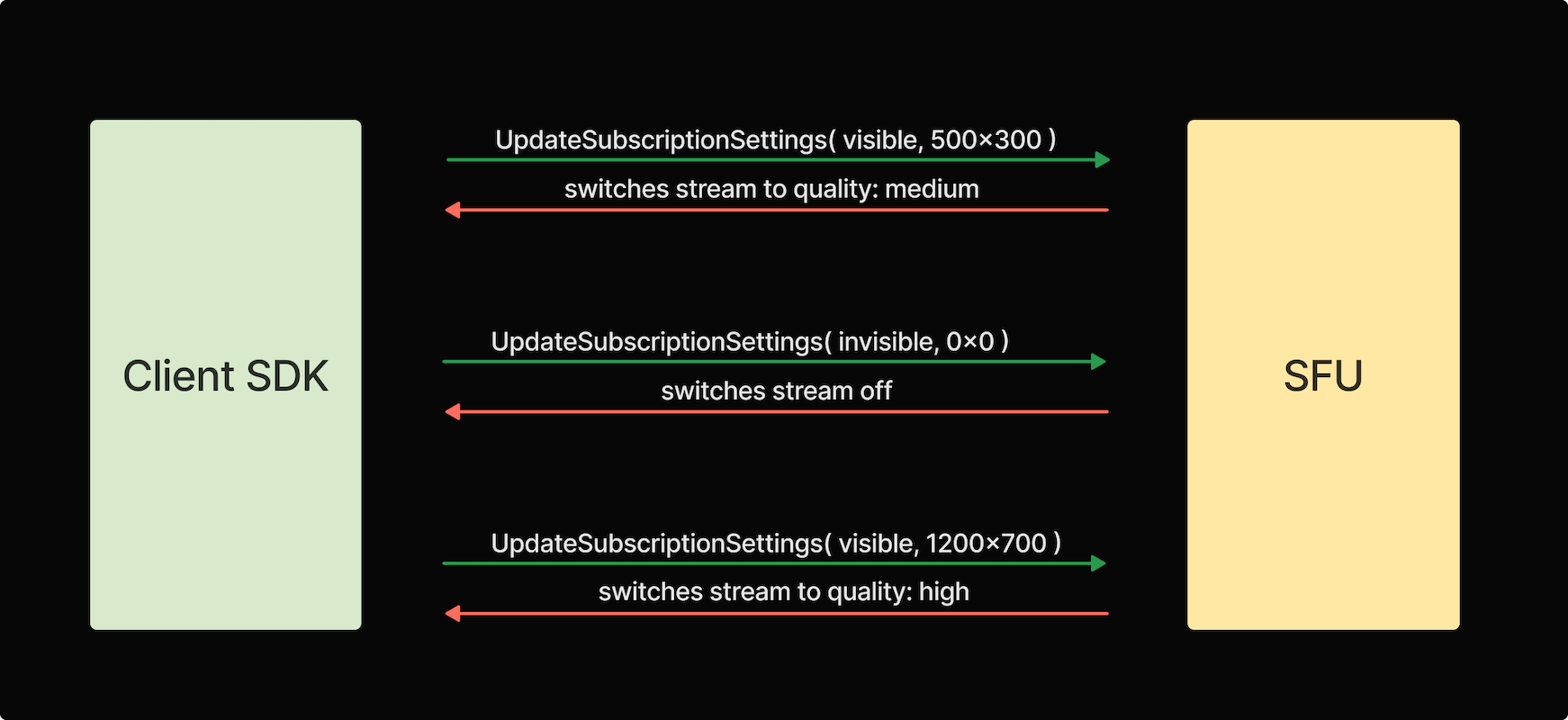

The second technique is adaptive stream, a subscriber-side feature that automatically monitors the visibility and size of any video element with a track attached. When the size of a managed element changes, our client SDK notifies the server, which responds by sending, from then on, the simulcast layer that most closely matches the element's new dimensions.

If that element is hidden, fully occluded, offscreen or otherwise invisible, the client informs the server, which pauses the associated track until the element becomes visible again. For example, if a user subscribes to 50 tracks and just three are visible, they'd receive video data for only those three tracks.

What if a track is attached to multiple video elements? We send the resolution appropriate for the largest among them, so every element renders in high fidelity.
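The subscriber-side decision described above can be sketched as a pure function. This is a hypothetical illustration, not LiveKit's SDK code: the `LAYERS` table and its dimensions are assumptions, "closest-matching" is interpreted as the smallest layer at least as large as the element, and device pixel ratio is ignored for simplicity.

```javascript
// Hypothetical simulcast layers for one track, lowest first (dimensions in pixels).
const LAYERS = [
  { quality: 'low', width: 320, height: 180 },
  { quality: 'medium', width: 640, height: 360 },
  { quality: 'high', width: 1280, height: 720 },
];

// Pick the smallest layer that covers the requested size; fall back to the highest.
function closestLayer(width, height, layers = LAYERS) {
  return layers.find((l) => l.width >= width && l.height >= height) ?? layers[layers.length - 1];
}

/**
 * Hypothetical decision for one track. `elements` lists every video element the
 * track is attached to, with its rendered size and whether it is visible.
 * Returns the layer to request, or null when the track should be paused.
 */
function desiredLayer(elements, layers = LAYERS) {
  const visible = elements.filter((e) => e.visible && e.width > 0 && e.height > 0);
  if (visible.length === 0) return null; // nothing on screen: pause the track
  // Size for the largest visible element so every element renders at full fidelity.
  const width = Math.max(...visible.map((e) => e.width));
  const height = Math.max(...visible.map((e) => e.height));
  return closestLayer(width, height, layers);
}
```

Applied to the earlier example of 50 subscribed tracks with three visible, 47 tracks would map to `null` (paused) and only three would request a layer.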

As a room grows, the bandwidth savings from adaptive stream become even more pronounced. Here's a 25-person meeting, which would ordinarily pull down 45Mbps, consuming just 4Mbps: a 92% reduction in bandwidth usage!

How visibility and size changes are detected varies by platform. Our JavaScript SDK uses the browser's ResizeObserver and IntersectionObserver APIs.
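A minimal sketch of that detection, assuming a helper called `watchVideoElement` (hypothetical, not part of LiveKit's API). It combines both browser observers into a single size-and-visibility state; the observer constructors can be injected so the logic also runs outside a browser. Note that `IntersectionObserver` reports geometric intersection with the viewport, so it does not by itself account for other elements drawn on top of the video.

```javascript
/**
 * Hypothetical helper: watches one video element and reports its rendered
 * size and visibility through `onChange`. Defaults to the browser's
 * ResizeObserver and IntersectionObserver; both can be injected for testing.
 */
function watchVideoElement(el, onChange, {
  ResizeObserverImpl = globalThis.ResizeObserver,
  IntersectionObserverImpl = globalThis.IntersectionObserver,
} = {}) {
  let state = { width: 0, height: 0, visible: false };
  const update = (patch) => {
    state = { ...state, ...patch };
    onChange(state);
  };

  // Size changes: contentRect holds the element's content-box dimensions.
  const resizeObserver = new ResizeObserverImpl((entries) => {
    for (const entry of entries) {
      update({ width: entry.contentRect.width, height: entry.contentRect.height });
    }
  });

  // Visibility changes: isIntersecting is false once the element leaves the viewport.
  const intersectionObserver = new IntersectionObserverImpl((entries) => {
    for (const entry of entries) {
      update({ visible: entry.isIntersecting });
    }
  });

  resizeObserver.observe(el);
  intersectionObserver.observe(el);

  // Call the returned function to stop watching.
  return () => {
    resizeObserver.disconnect();
    intersectionObserver.disconnect();
  };
}
```

A real implementation would likely debounce `onChange` before notifying the server, since resize callbacks can fire rapidly during layout changes.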

To enable adaptive stream, simply set a boolean when creating the Room object:

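```javascript
import { Room } from 'livekit-client';

// Enable adaptive stream: the SDK monitors the size and visibility of
// attached video elements and asks the server for matching layers.
const room = new Room({
  adaptiveStream: true,
});
```

Dynamic broadcast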

Dynamic broadcast is a publisher-side feature that conserves your upstream bandwidth based on whether, and how, subscribers are consuming your video. By default, simulcast publishes three layers: low, medium and high resolution. In a large meeting where you're in the audience, visible to every other participant only as a small video element, publishing the medium or high layers would be wasteful. If you were assigned to the second or third "page" of participants, odds are nobody is viewing your stream at all, obviating the need to publish video.

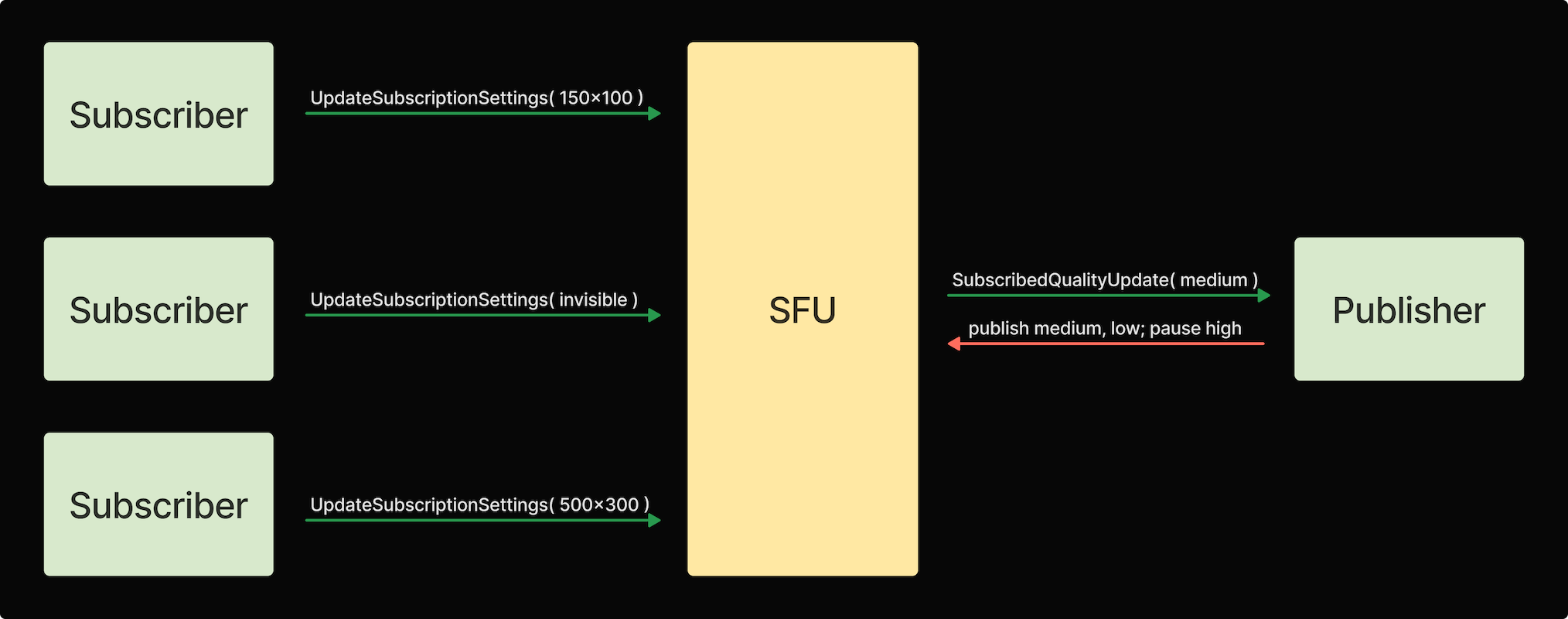

This is the idea behind dynamic broadcast: automatically monitor how many subscribers are consuming a publisher's video, and at what quality, then adjust upload characteristics based on these signals.

For each track, LiveKit aggregates subscriber information and notifies the publisher of the maximum quality it needs to publish at any given moment. Our client SDK handles these messages and pauses or resumes each individual simulcast layer as needed. When no one is subscribed to a particular track, the publisher is instructed to stop publishing it entirely!
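The aggregation step can be sketched as follows. This is a hypothetical illustration rather than LiveKit's protocol: qualities are encoded as layer indices, and `maxRequestedQuality` and `layerActivity` are made-up names. In a browser, the resulting flags could be applied by setting `encodings[i].active` on the object returned by `RTCRtpSender.getParameters()` and passing it back to `setParameters()`.

```javascript
// Hypothetical quality levels; each value doubles as a simulcast layer index.
const QUALITY = { off: -1, low: 0, medium: 1, high: 2 };

/**
 * Hypothetical server-side step: each subscriber reports the highest quality it
 * wants for this track (QUALITY.off if paused). The publisher only needs to
 * produce layers up to the maximum of those requests.
 */
function maxRequestedQuality(subscriberRequests) {
  return subscriberRequests.reduce((max, q) => Math.max(max, q), QUALITY.off);
}

/**
 * Hypothetical publisher-side step: turn the max quality into per-layer
 * `active` flags. With no subscribers, every layer is paused.
 */
function layerActivity(maxQuality, layerCount = 3) {
  return Array.from({ length: layerCount }, (_, i) => i <= maxQuality);
}
```

Layers below the maximum stay active because other subscribers may still want them; only layers above the maximum, or every layer when nobody is subscribed, are paused.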

Together, adaptive stream and dynamic broadcast let developers build dynamic video applications without worrying about how interface design or user interaction might affect video quality. They let us request and fetch only the bits necessary for high-quality rendering, which helps with scaling to very large sessions.

Congestion control

Network connectivity and congestion are unpredictable, especially on home and public WiFi. Some of your users will inevitably encounter periods of congestion, and keeping their conversations flowing smoothly through them is a difficult problem. When congestion occurs, there are a couple of clear indicators:

- It takes longer to transmit packets from source to destination

- Packet loss increases

Without mitigation, these effects are particularly catastrophic for real-time media: video stutters or freezes as frames take longer to arrive or can't be fully decoded, while audio becomes choppy or distorted.

To deal with network congestion, we need to transmit data below the available capacity of the network. How do we determine that capacity? There are a few well-researched techniques that yield good estimates. We'll also need to update our estimates periodically, since network conditions are dynamic and can change quickly, especially on mobile. Armed with this information, the question becomes: how do we temper the amount of data we're sending?
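As one concrete example of such a technique, the loss-based controller in Google Congestion Control (GCC, described in the IETF draft draft-ietf-rmcat-gcc) adjusts its estimate after each loss report: above 10% loss it cuts the rate by half the loss fraction, below 2% it raises the rate by 5%, and in between it holds steady. The sketch below implements only that rule; GCC also has a delay-based component, and the post doesn't say which estimator LiveKit uses. The function name is hypothetical.

```javascript
/**
 * Loss-based rate update in the style of GCC's loss-based controller.
 * `estimateBps` is the current sending-rate estimate; `lossFraction` is the
 * fraction of packets lost since the last report (0..1).
 */
function updateLossBasedEstimate(estimateBps, lossFraction) {
  if (lossFraction > 0.1) {
    // Heavy loss: back off in proportion to the loss.
    return estimateBps * (1 - 0.5 * lossFraction);
  }
  if (lossFraction < 0.02) {
    // Negligible loss: probe upward by 5%.
    return estimateBps * 1.05;
  }
  // Moderate loss (2% to 10%): hold the current estimate.
  return estimateBps;
}
```

Applied on every report, this lets the estimate follow capacity as it shifts over time, backing off quickly under heavy loss and creeping back up once the path is clean.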

A typical WebRTC client that encodes media simply instructs its encoder to target the bitrate determined by the bandwidth estimator.

On the server (SFU), however, things are much more complicated for two reasons:

- It does not control the bitrate of the streams it's forwarding.

- It must coordinate all streams a user asks to receive.

Stream allocator

We built a controller called StreamAllocator to handle these server-side challenges. Its job is to take an available bandwidth estimate and decide how to forward data to a subscriber. For example, if a user subscribes to ten tracks, each publishing 2Mbps, but has only 5Mbps of available bandwidth, what should we send?

If the tracks are simulcasted, our job is slightly easier. Depending on the dimensions of the video elements on the receiving client (a proxy for priority), we can drop to lower-bitrate layers for some or all of the tracks. In extreme cases, however, we may prioritize audio or pause low-priority video streams to ensure high-priority ones are received smoothly.

At a high level, our stream allocator performs the following:

- Determine bandwidth requirements for each track

- Rank tracks based on priority (audio first, then screen share, then video)

- Distribute bandwidth among tracks according to policy (e.g., it's better to show low-resolution layers for every track than to have some tracks appear frozen)

- Periodically check whether additional bandwidth has become available, and repeat
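The steps above can be sketched as a greedy two-pass allocator. This is a hypothetical illustration, not LiveKit's StreamAllocator: the track shape, the use of rendered element area as `size`, and the layer bitrates are assumptions. Pass 1 implements the policy of keeping every track flowing at its lowest layer; pass 2 spends whatever budget is left on upgrades in rank order. The last step, periodic re-discovery, amounts to calling the function again whenever the bandwidth estimate changes.

```javascript
// Rank order from the list above: audio first, then screen share, then video.
const KIND_RANK = { audio: 0, screen: 1, video: 2 };

/**
 * Hypothetical allocator. Each track: { id, kind, size, layers }, where `layers`
 * holds the bitrate (bps) of each encoding, lowest first, and `size` is the
 * rendered element area, used as a priority proxy within the same kind.
 * Returns the chosen layer index per track (-1 = paused) and the leftover budget.
 */
function allocate(tracks, availableBps) {
  const ranked = [...tracks].sort(
    (a, b) => KIND_RANK[a.kind] - KIND_RANK[b.kind] || (b.size ?? 0) - (a.size ?? 0)
  );
  const chosen = new Map(ranked.map((t) => [t.id, -1]));
  let remaining = availableBps;

  // Pass 1: give every track its lowest layer in rank order, so as many tracks
  // as possible keep flowing instead of a few at high quality.
  for (const t of ranked) {
    if (t.layers[0] <= remaining) {
      chosen.set(t.id, 0);
      remaining -= t.layers[0];
    }
  }

  // Pass 2: upgrade tracks one layer per round, in rank order, while budget allows.
  let upgraded = true;
  while (upgraded) {
    upgraded = false;
    for (const t of ranked) {
      const current = chosen.get(t.id);
      if (current < 0 || current + 1 >= t.layers.length) continue;
      const extra = t.layers[current + 1] - t.layers[current];
      if (extra <= remaining) {
        chosen.set(t.id, current + 1);
        remaining -= extra;
        upgraded = true;
      }
    }
  }
  return { chosen, remaining };
}
```

With hypothetical layers of 150kbps, 500kbps and 2Mbps, the ten-track example from above (5Mbps available) ends with every track on its middle layer, 10 × 500kbps = 5Mbps in total, rather than two tracks at full quality and eight frozen.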

Audio

Although audio uses much less data than video (by default, Opus publishes at around 20kbps), subscribing to many tracks adds up. Fortunately, WebRTC supports Opus DTX (Discontinuous Transmission), which, when enabled, significantly reduces the bitrate while microphone input is quiet or silent. In our testing, 20kbps streams drop to 1kbps under those conditions. Even in large conferences, DTX largely eliminates scaling concerns with respect to audio, as seldom more than a few people speak at once.
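A quick back-of-the-envelope calculation shows why. The helper below uses the figures quoted above, about 20kbps per speaking track and about 1kbps per silent track with DTX; the function name and the 50-participant, three-speaker scenario are hypothetical, and treating silent tracks without DTX as sending at the full 20kbps is a simplifying assumption.

```javascript
/**
 * Hypothetical estimate of one subscriber's audio download rate (kbps).
 * Assumes speaking tracks send ~20 kbps, silent tracks ~1 kbps with DTX, and
 * (simplification) silent tracks without DTX still send the full ~20 kbps.
 */
function audioDownloadKbps(tracks, activeSpeakers, { dtx = true, speakingKbps = 20, silentDtxKbps = 1 } = {}) {
  if (!dtx) return tracks * speakingKbps; // every track sends at full rate
  const silent = tracks - activeSpeakers;
  return activeSpeakers * speakingKbps + silent * silentDtxKbps;
}
```

For 50 subscribed audio tracks with three active speakers, `audioDownloadKbps(50, 3, { dtx: false })` gives 1000kbps, while `audioDownloadKbps(50, 3)` gives 3 × 20 + 47 × 1 = 107kbps.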

LiveKit enables DTX by default, but you may disable it during track publication.
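Under the hood, Opus DTX is negotiated in the session description: RFC 7587 defines a `usedtx` parameter on the Opus `a=fmtp` line. The hypothetical `setOpusDtx` helper below shows where that parameter lives by rewriting it in an SDP string. It is illustrative only; the post doesn't say how LiveKit's SDKs toggle DTX, and applications should disable it during track publication as described above rather than editing SDP.

```javascript
/**
 * Hypothetical SDP-munging helper: sets usedtx=1 or usedtx=0 on every Opus
 * fmtp line. Illustrates the mechanism only; not LiveKit's implementation.
 */
function setOpusDtx(sdp, enabled) {
  const lines = sdp.split('\r\n');
  // Find Opus payload types from lines like "a=rtpmap:111 opus/48000/2".
  const opusPts = new Set(
    lines
      .map((l) => l.match(/^a=rtpmap:(\d+) opus\/48000/i))
      .filter(Boolean)
      .map((m) => m[1])
  );
  return lines
    .map((line) => {
      const m = line.match(/^a=fmtp:(\d+) (.*)$/);
      if (!m || !opusPts.has(m[1])) return line; // not an Opus fmtp line
      // Drop any existing usedtx, then append the desired value.
      const params = m[2].split(';').filter((p) => p.trim() && !p.trim().startsWith('usedtx='));
      params.push(`usedtx=${enabled ? 1 : 0}`);
      return `a=fmtp:${m[1]} ${params.join(';')}`;
    })
    .join('\r\n');
}
```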

Adaptive stream, dynamic broadcast, congestion control and DTX all work in concert, behind the scenes, through deep integration between LiveKit's server and client SDKs. As a developer, any application you build on our infrastructure will benefit from techniques similar to those Zoom uses to deliver high-quality video and audio to its users.

LiveKit 1.0 is a big milestone for the core team, but this is only the beginning. We've got some interesting new projects, features, and improvements hitting the public repos soon. If working on this stuff excites you, please join our community and let's build together.