Decentraland's Catalyst: using WebRTC to interact in the metaverse

Every decentralized platform builder faces this problem: which components should be decentralized, and to what extent?

The metaverse project Decentraland faced this question when its original peer-to-peer messaging transport hit performance bottlenecks that limited users’ ability to chat and interact in real time. In this post, we’ll walk through how the team solved the performance problem while maintaining operator independence.

For those who aren't familiar, Decentraland is a decentralized virtual world platform built on the Ethereum blockchain. It lets anyone create, explore, and monetize content and applications such as virtual land, buildings, items, or mini-games. Anyone can run a Decentraland node, which is like a game server. Every node sees the same world instance (buildings, etc.), but users on a node can only see and interact with others on the same node. (The design is pretty similar to World of Warcraft’s “shards”.)

Any multiplayer world needs a way to share user interactions and state changes with nearby players. Decentraland does this over WebRTC data channels: push-to-talk voice chat, player movement, chat messages, and world interactions (like opening and closing doors) are all shared over data channels.

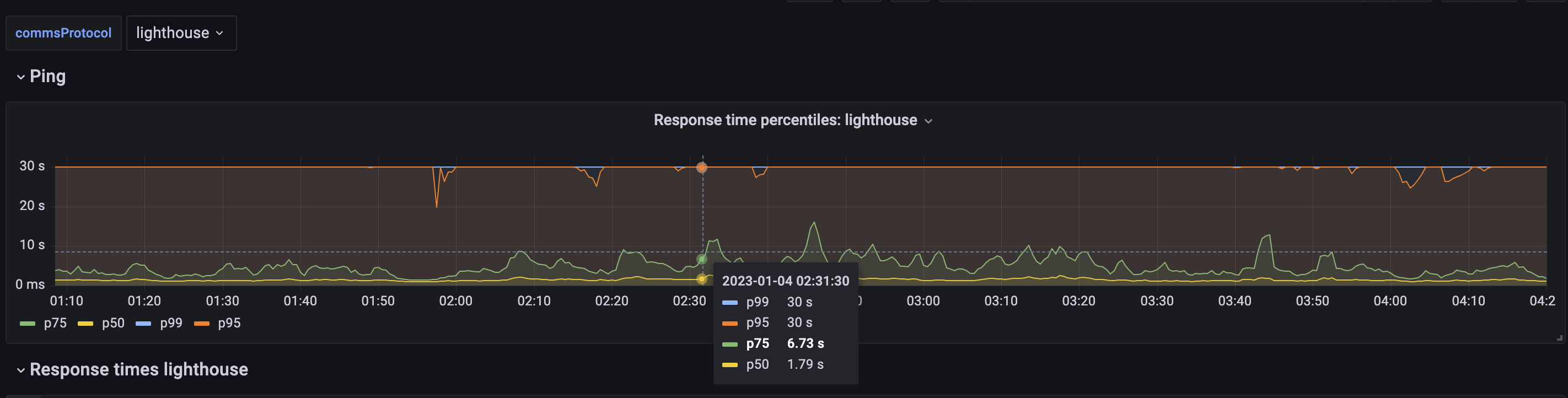
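
To make the idea concrete, here is a minimal sketch of how state changes like these could be serialized before being handed to a data channel. The message shapes and the JSON encoding are assumptions chosen for illustration; they are not Decentraland's actual wire format.

```typescript
// Hypothetical message shapes for illustration only.
type WorldMessage =
  | { type: "position"; playerId: string; x: number; y: number; z: number }
  | { type: "chat"; playerId: string; text: string }
  | { type: "door"; doorId: string; open: boolean };

const encoder = new TextEncoder();
const decoder = new TextDecoder();

// Serialize a message to bytes suitable for a binary data channel.
function encodeMessage(msg: WorldMessage): Uint8Array {
  return encoder.encode(JSON.stringify(msg));
}

// Parse bytes received from a data channel, rejecting unknown message types.
function decodeMessage(bytes: Uint8Array): WorldMessage {
  const parsed = JSON.parse(decoder.decode(bytes));
  const known = ["position", "chat", "door"];
  if (typeof parsed !== "object" || parsed === null || !known.includes(parsed.type)) {
    throw new Error("unknown message type");
  }
  return parsed as WorldMessage;
}
```

In a browser, the bytes returned by `encodeMessage` could be passed to `RTCDataChannel.send()`. One plausible design choice is to send frequent position updates on an unordered, unreliable channel (for example `maxRetransmits: 0`) and chat messages on a reliable one, since a stale position is not worth retransmitting.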

Initially, all these state changes happened over peer-to-peer WebRTC connections. But this architecture quickly ran into scaling problems: peer-to-peer WebRTC does not scale beyond 4 connected peers per client, and players frequently interact with larger groups and crowds. To support interactions in a large group, the Decentraland team first tried propagating data across multiple peer-to-peer hops, but latency was too high. For a 100-user cluster, the client p75 ping time was 6–7 seconds.
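
A back-of-the-envelope calculation shows why relaying becomes unavoidable. The sketch below is a best-case bound, not a model of Decentraland's actual topology: it assumes each peer holds at most 4 connections (the limit mentioned above) and that messages fan out perfectly, with no two relays reaching the same peer. The function names are ours.

```typescript
// Connections needed if every peer connected directly to every other peer.
function fullMeshConnections(peers: number): number {
  return (peers * (peers - 1)) / 2;
}

// Upper bound on peers reachable within `hops` relays when each peer has at
// most `degree` connections. After the first hop, one of each peer's links
// points back toward the sender, so it can reach at most degree - 1 new peers.
function maxReachable(degree: number, hops: number): number {
  let total = 1; // the sender itself
  let frontier = 1;
  for (let h = 1; h <= hops; h++) {
    frontier *= h === 1 ? degree : degree - 1;
    total += frontier;
  }
  return total;
}

// Smallest number of hops that could possibly cover a cluster of `peers`.
function minHopsToCover(peers: number, degree: number): number {
  if (degree < 3) throw new Error("this sketch assumes at least 3 connections per peer");
  let hops = 0;
  while (maxReachable(degree, hops) < peers) hops++;
  return hops;
}

console.log(fullMeshConnections(100)); // 4950
console.log(minHopsToCover(100, 4)); // 4
```

So a full mesh for 100 users would need 4,950 connections, while a 4-connection limit forces some messages through at least 4 relays even in an ideal layout. Each relay adds its own network and processing delay, and real peer graphs are far from ideal.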

To solve this, the team built a transport layer abstraction ("Catalyst") to support multiple WebRTC transport options. In addition to their existing peer-to-peer transport, node operators can either host their own instance of LiveKit’s open-source server, or use LiveKit Cloud and leave the server maintenance to us. Any node operator can switch between transports with a simple configuration change. Since the release of Catalyst, all Foundation and Community nodes have opted to run on LiveKit.

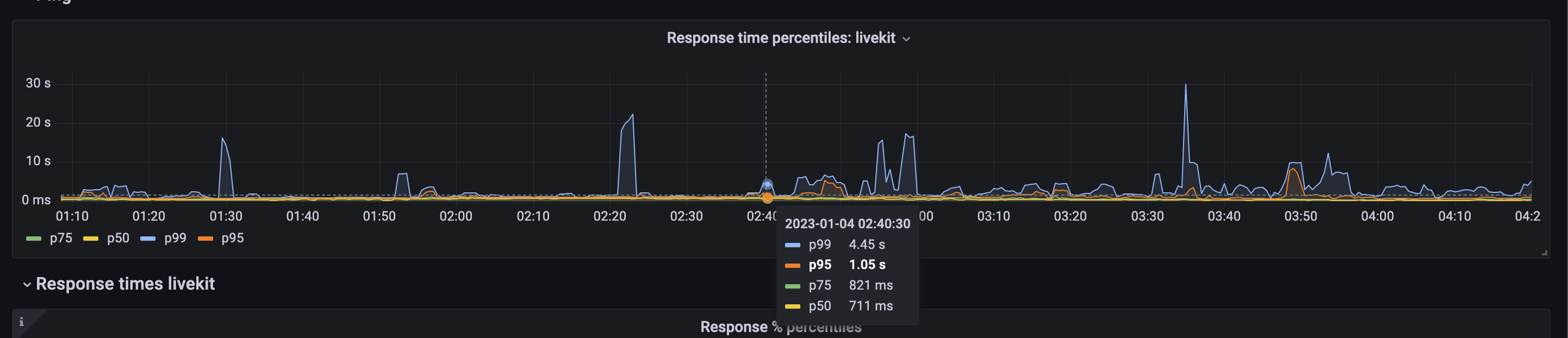
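
A transport abstraction like the one described above could look roughly like the following. This is a sketch under our own assumptions: the type names, config fields, and factory function are hypothetical and do not come from Decentraland's codebase.

```typescript
// Hypothetical names throughout; not taken from Decentraland's code.
type TransportKind = "p2p" | "livekit-selfhosted" | "livekit-cloud";

interface TransportConfig {
  kind: TransportKind;
  url?: string; // server URL, needed for both LiveKit options
}

interface Transport {
  readonly kind: TransportKind;
  describe(): string;
}

// Pick a transport implementation from the operator's configuration.
function createTransport(config: TransportConfig): Transport {
  if (config.kind === "p2p") {
    return { kind: "p2p", describe: () => "direct peer-to-peer data channels" };
  }
  if (!config.url) {
    throw new Error(`${config.kind} requires a server URL`);
  }
  const url = config.url;
  return { kind: config.kind, describe: () => `LiveKit server at ${url}` };
}

// Switching transports is a one-line config change for the operator.
const transport = createTransport({ kind: "livekit-cloud", url: "wss://example.invalid" });
console.log(transport.describe());
```

Because the rest of the node only depends on the `Transport` interface, the choice between peer-to-peer, self-hosted, and hosted options stays with the operator rather than being baked into the platform.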

The results were dramatic. Quantitatively, clients’ p75 ping time dropped to 800 milliseconds. Voice chat, now exchanged between users as WebRTC audio tracks, is delivered in real time. The improved performance has also had a profound qualitative effect on user behavior, producing spontaneous interactions, live audio conversations, and events where users sing karaoke and “Happy Birthday”.

Much of the drop in latency comes from LiveKit Cloud’s global mesh network. Each Decentraland user connects to the LiveKit server geographically closest to them, and data is exchanged between users over the private internet backbone. Think of it like a CDN, but for real-time media.

To return to the problem posed at the top of this post, LiveKit’s open-source core allows Decentraland to deploy a mix of transport implementations, both decentralized and centralized. Decentraland node operators can decide for themselves whether they want to use LiveKit Cloud, host their own instance of LiveKit, or continue using peer-to-peer transport.

You can read more technical details about Decentraland’s WebRTC architecture in their Architecture Decision Record, or create your own WebRTC project using LiveKit Cloud. And feel free to say hi in our Slack community. We always love to hear what people are building with LiveKit!