Announcing LiveKit Cloud

I recently spoke with an engineer whose company transforms agricultural vehicles like tractors into self-driving, autonomous robots for tasks like mowing, spraying and weeding. Seriously, this is straight out of Interstellar.

Sometimes a farmer needs to take control of the machine, which is often deployed in a rural area with spotty internet access, so this company installed LiveKit on an Nvidia Jetson running onboard the vehicle. A farmer can simply take out their phone and, using LiveKit, observe the vehicle’s behavior, modify its planned route, or remotely pilot it in real time. This is one of those 🤯 use-cases that illustrates the power and flexibility of open source software.

Over the past year, we’ve had many conversations with developers like the one above to understand why and how they were using LiveKit and how we could make it better. There are now over 1,000 projects and companies using LiveKit to power real-time video and audio features in their applications. They span a wide range of use-cases: conferencing, livestreaming, metaverse or video games, robotics, collaboration, telephony, and logistics. Most developers had tried a handful of commercial offerings, only to find them unreliable, lacking support and observability, or prohibitively expensive. LiveKit was uniquely positioned as a free, fully open-source stack with more features, SDK integrations and documentation than even commercial options.

We also learned that while many folks were successfully running LiveKit in production, operating WebRTC infrastructure at scale is hard. Many teams would rather focus on product-market fit instead of wrestling with Kubernetes. Others want a multi-home solution, allowing a session to scale beyond a single node in a data center. For all these developers, we wanted to make it simple to go from proof-of-concept to production with LiveKit.

Thus, after a year of building and a half-year-long closed beta with 50 customers, we’re excited to unveil LiveKit Cloud.

LiveKit Cloud (“Cloud”) is a fully-managed, globally distributed WebRTC platform that shortens the process of integrating, deploying and scaling LiveKit to a few clicks. We had just one imperative when we sat down to design this system: no developer would be locked into our platform. An open source user should be able to migrate to Cloud, and a Cloud customer should be able to switch to self-hosted at any moment.

We addressed this by building Cloud on top of LiveKit’s open-source (OSS) stack. Cloud uses the same SFU, supports the same APIs and SDKs, and has all the same features as LiveKit Open Source. You can effortlessly switch between the two without changing a line of code. This eliminates the vendor or platform risks inherent in other commercial WebRTC providers. Three aspects make Cloud unique:

🌐 Cloud is built for massive scale

You may have noticed real-time experiences like all-hands meetings or livestreams keep getting larger. Developers have told us how their products hit scaling walls with traditional media servers (and CPaaS providers): most impose a limit of fewer than 200 participants per session. This is due to a single-home architecture: a session is hosted on a single server somewhere in the world, and all audio and video from every participant is routed through that server, regardless of where participants are located.

While a single-home architecture is easier to build, it can’t scale beyond the capacity of a single server. At some point, that server’s compute or network capacity is reached. There are also quality-of-experience drawbacks. For example, if one user joins a session from New York, and another from Tokyo, at least one of them will have to send their data over the public internet to a server that's far away.
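To put rough numbers on that ceiling, here is a back-of-the-envelope sketch of how much data a single forwarding server has to send out. The function name, the 1.5 Mbps stream bitrate, and the assumption that everyone subscribes to every stream at full quality are all illustrative choices of mine, not LiveKit measurements or behavior.

```python
def sfu_egress_mbps(participants: int, publishers: int, stream_mbps: float) -> float:
    """Total egress a single forwarding server sends, in Mbps.

    Assumes every participant subscribes to every publisher except themselves,
    and every stream has the same bitrate (no simulcast, no adaptive stream).
    """
    if not 0 <= publishers <= participants:
        raise ValueError("publishers must be between 0 and participants")
    viewers = participants - publishers
    # Each publisher receives the other publishers' streams;
    # each pure viewer receives every publisher's stream.
    streams_out = publishers * (publishers - 1) + viewers * publishers
    return streams_out * stream_mbps


# Hypothetical inputs: 1.5 Mbps per video stream.
print(sfu_egress_mbps(participants=10, publishers=10, stream_mbps=1.5))    # small meeting
print(sfu_egress_mbps(participants=200, publishers=200, stream_mbps=1.5))  # everyone on camera
print(sfu_egress_mbps(participants=5000, publishers=1, stream_mbps=1.5))   # one-to-many livestream
```

Under these assumptions the egress grows with the square of the number of publishers: 200 people all on camera works out to 200 × 199 × 1.5 = 59,700 Mbps, far more than one machine's network link can carry. Real deployments soften this with techniques like simulcast and selective subscription, but the single-server ceiling remains.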

For Cloud, we created a multi-home architecture using a custom session orchestration layer we built around LiveKit's SFU. Each user connects to an edge server that's closest to them, and these servers interconnect over backbone fiber connections to form an SFU mesh. We architected this setup to support massive scale, ultra-low latency, and rock-solid reliability. The version we’re launching today supports up to 100,000 people together, sharing an experience, with less than 100ms of latency between them. The infrastructure behind it runs across a blend of cloud providers and is fault-tolerant against node, region, and even entire provider outages.
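As a toy illustration of the "connect to the closest edge" idea, the sketch below picks an edge by great-circle distance. Everything in it is hypothetical: the edge names and coordinates are made up, and geographic distance is only a stand-in I chose for simplicity. A production system would more plausibly pick based on measured network latency, and this is not a description of how Cloud actually routes users.

```python
import math

# Hypothetical edge locations as (latitude, longitude) in degrees.
EDGES = {
    "new-york": (40.71, -74.01),
    "frankfurt": (50.11, 8.68),
    "tokyo": (35.68, 139.69),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_edge(user: tuple[float, float]) -> str:
    """Name of the edge geographically closest to the user."""
    return min(EDGES, key=lambda name: haversine_km(user, EDGES[name]))


boston, osaka = (42.36, -71.06), (34.69, 135.50)
print(nearest_edge(boston), nearest_edge(osaka))
# Single-home: at least one of the two users crosses roughly this distance
# over the public internet to reach the one shared server.
print(round(haversine_km(EDGES["new-york"], EDGES["tokyo"])), "km")
```

With multi-home, each user's "last mile" over the public internet is the short hop to their own edge, and the long haul between edges happens on the interconnect instead.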

To achieve this feat of engineering, we had to approach the problem from first principles. In this post, my cofounder, David, tells you about how we did it.

💲 Cloud adopts a disruptive pricing model

The participant minute is the industry-wide way developers are metered and charged for using cloud-based WebRTC services. It works like this: for every minute a participant is in a session, regardless of whether they’re transmitting video, audio, or neither, that counts as a billable participant minute. It’s simple to explain, but the complexity comes from trying to predict your costs. The price-per-participant-minute has to factor in that some folks may transmit 1080p video while others have their cameras off. It’s like a gym membership: the gym makes money from everyone who pays and never shows up.

There are specific issues with this model, but at a high level, it severely constrains the types of video and/or audio applications one might create: certain use-cases, especially large-scale ones, become so expensive that it’s impractical to build them. We took a different tack when it came to pricing. LiveKit Cloud adopts a cost-based model, metering your application by the amount of data it transfers (bandwidth) and media processing it performs (transcoding).
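Here is a small sketch of how the two metering approaches differ in shape. Every rate and bitrate in it is a placeholder I made up for illustration, not LiveKit Cloud's pricing or anyone else's, and it covers only the bandwidth part of a cost-based model (transcoding is left out).

```python
def participant_minute_cost(participants: int, minutes: float,
                            price_per_minute: float) -> float:
    """Cost when every connected minute is billed at the same flat rate."""
    return participants * minutes * price_per_minute


def gb_transferred(mbps: float, minutes: float) -> float:
    """Gigabytes (decimal) moved by a stream sustained at `mbps` for `minutes`."""
    megabits = mbps * minutes * 60
    return megabits / 8 / 1000


def bandwidth_cost(participants: int, mbps: float, minutes: float,
                   price_per_gb: float) -> float:
    """Cost when billing follows the data each participant actually receives."""
    return participants * gb_transferred(mbps, minutes) * price_per_gb


# Hypothetical rates, chosen only to show the shape of each model.
PRICE_PER_MINUTE = 0.004  # dollars per participant minute (made up)
PRICE_PER_GB = 0.10       # dollars per GB transferred (made up)

for label, mbps in [("audio-only listeners", 0.03), ("1080p viewers", 3.0)]:
    flat = participant_minute_cost(1000, 60, PRICE_PER_MINUTE)
    metered = bandwidth_cost(1000, mbps, 60, PRICE_PER_GB)
    print(f"{label}: per-minute ${flat:.2f} vs bandwidth ${metered:.2f}")
```

The per-minute figure is identical for both audiences because it ignores what each participant consumes, while the bandwidth figure scales with the bitrate. That difference is what makes large, mostly passive audiences cheaper to serve under a cost-based model.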

Cost-based pricing enables new types of applications, particularly around livestreaming and spatial computing, to be economically viable. I’ve written in depth about our model and philosophy around pricing here.

📈 Cloud offers unparalleled observability for free

As with Cloud’s technology and pricing, we chose to rethink real-time video analytics from the ground up.

The default expectation with video and audio infrastructure is that it just works. If something goes wrong, the first thing a developer wants to know is: when did it happen? The second is: why? The answers to these questions typically lie deep in the network stack and depend on things like the device a user’s connecting with, where they’re connecting from, what else they’re doing on their device, and the type of router they’re connected to. Heck, sometimes the issue is they’re standing next to a running microwave. I’m not joking.

Usually the end-users of the application know why things have gone awry. Like, when you’re on a Zoom call and everyone freezes, you check if you’re still connected to the internet. For the developer of that application though, it’s harder to understand by only looking at quantitative data. This became our barometer as we designed our data product: could a developer looking solely at our telemetry data grok the end-to-end experience for any participant?

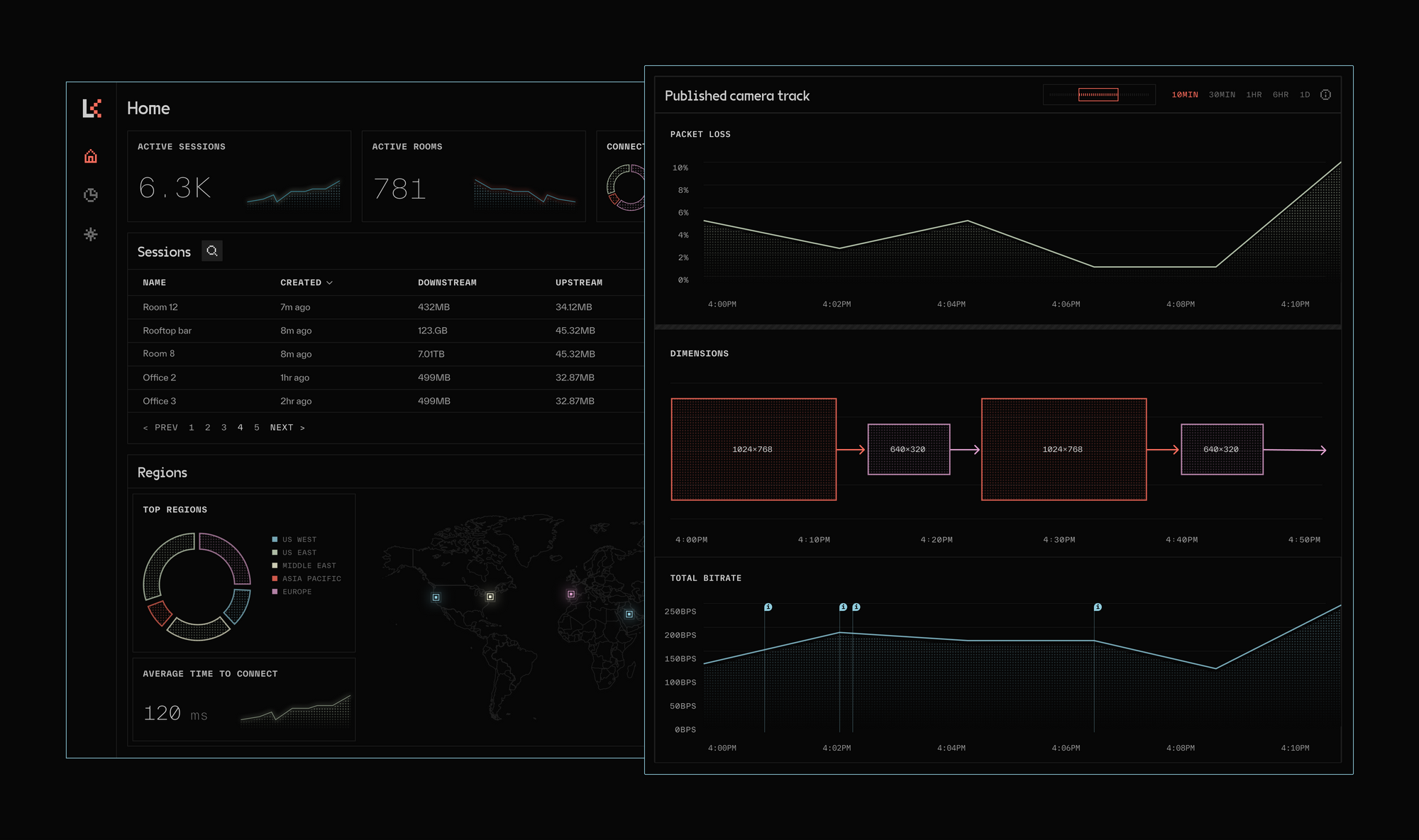

We’ve built the most detailed real-time media analytics solution out there. Developers can drill all the way down to track-level statistics for each participant’s published and subscribed streams, to view information like bitrate, frame rate, packet loss, and simulcast layer changes. Our system is tightly integrated with LiveKit’s unique features like adaptive stream and dynamic broadcast, giving you a complete picture of the interactions between server and client. We annotate graphs with markers when Cloud detects things went wrong for a particular user, and add flags (with explainers!) for when the system is making layer switches or adjusting stream attributes based on changes to a client’s estimated bandwidth.

While access to Cloud’s analytics dashboards is completely free for all projects, our server-side analytics and telemetry collection endpoint is also built into LiveKit’s open-source SFU. Any developer self-hosting LiveKit who’s interested in exporting the same data to their own system, for analysis or building custom dashboards, can easily hook in.

An amazing level of effort from the LiveKit team has gone into LiveKit Cloud. From a single product you get a massive-scale global network for real-time media, a novel pricing model which unlocks entire classes of new apps, and the most detailed real-time video analytics system ever built. We’re excited for you to give it a whirl and let us know what you think!

P.S. Thank you Theo, Raja, Shishir, DC, Brian, Jonas, Lukas, Herzog, DL, Mark, Noah, Don, Jie, Duan, Hiroshi, Benjamin, DZ, Dan, and Mat. You’ve made something incredible. I may write the blog posts, but you all deserve the credit. ❤️